dino-0.1.0/.gitmodules

[submodule "libsignal-protocol-c"]

path = plugins/signal-protocol/libsignal-protocol-c

url = https://github.com/WhisperSystems/libsignal-protocol-c.git

branch = v2.3.2

dino-0.1.0/CMakeLists.txt

cmake_minimum_required(VERSION 3.3)

list(APPEND CMAKE_MODULE_PATH ${CMAKE_SOURCE_DIR}/cmake)

include(ComputeVersion)

if (NOT VERSION_FOUND)

project(Dino LANGUAGES C)

elseif (VERSION_IS_RELEASE)

project(Dino VERSION ${VERSION_FULL} LANGUAGES C)

else ()

project(Dino LANGUAGES C)

set(PROJECT_VERSION ${VERSION_FULL})

endif ()

# Prepare Plugins

set(DEFAULT_PLUGINS omemo;openpgp;http-files)

foreach (plugin ${DEFAULT_PLUGINS})

if ("$CACHE{DINO_PLUGIN_ENABLED_${plugin}}" STREQUAL "")

if (NOT DEFINED DINO_PLUGIN_ENABLED_${plugin})

set(DINO_PLUGIN_ENABLED_${plugin} "yes" CACHE BOOL "Enable plugin ${plugin}")

else ()

set(DINO_PLUGIN_ENABLED_${plugin} "${DINO_PLUGIN_ENABLED_${plugin}}" CACHE BOOL "Enable plugin ${plugin}" FORCE)

endif ()

if (DINO_PLUGIN_ENABLED_${plugin})

message(STATUS "Enabled plugin: ${plugin}")

else ()

message(STATUS "Disabled plugin: ${plugin}")

endif ()

endif ()

endforeach (plugin)

if (DISABLED_PLUGINS)

foreach(plugin ${DISABLED_PLUGINS})

set(DINO_PLUGIN_ENABLED_${plugin} "no" CACHE BOOL "Enable plugin ${plugin}" FORCE)

message(STATUS "Disabled plugin: ${plugin}")

endforeach(plugin)

endif (DISABLED_PLUGINS)

if (ENABLED_PLUGINS)

foreach(plugin ${ENABLED_PLUGINS})

set(DINO_PLUGIN_ENABLED_${plugin} "yes" CACHE BOOL "Enable plugin ${plugin}" FORCE)

message(STATUS "Enabled plugin: ${plugin}")

endforeach(plugin)

endif (ENABLED_PLUGINS)
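
# Usage sketch (the build directory layout is an assumption): the two loops
# above read DISABLED_PLUGINS and ENABLED_PLUGINS as semicolon-separated lists,
# so plugins can presumably be toggled at configure time from a build
# directory one level below the source tree, e.g.
#   cmake -DDISABLED_PLUGINS="openpgp;http-files" ..
#   cmake -DENABLED_PLUGINS="omemo" ..
# Both loops write the cache with FORCE and ENABLED_PLUGINS is processed last,
# so a plugin named in both lists ends up enabled.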

set(PLUGINS "")

get_cmake_property(all_variables VARIABLES)

foreach (variable_name ${all_variables})

if (variable_name MATCHES "^DINO_PLUGIN_ENABLED_(.+)$" AND ${variable_name})

list(APPEND PLUGINS ${CMAKE_MATCH_1})

endif()

endforeach ()

list(SORT PLUGINS)

string(REPLACE ";" ", " PLUGINS_TEXT "${PLUGINS}")

message(STATUS "Configuring Dino ${PROJECT_VERSION} with plugins: ${PLUGINS_TEXT}")

# Prepare install paths

macro(set_path what val desc)

if (NOT ${what})

unset(${what} CACHE)

set(${what} ${val})

endif ()

if (NOT "${${what}}" STREQUAL "${_${what}_SET}")

message(STATUS "${desc}: ${${what}}")

set(_${what}_SET ${${what}} CACHE INTERNAL ${desc})

endif()

endmacro(set_path)

string(REGEX REPLACE "^liblib" "lib" LIBDIR_NAME "lib${LIB_SUFFIX}")

set_path(CMAKE_INSTALL_PREFIX "${CMAKE_INSTALL_PREFIX}" "Installation directory for architecture-independent files")

set_path(EXEC_INSTALL_PREFIX "${CMAKE_INSTALL_PREFIX}" "Installation directory for architecture-dependent files")

set_path(SHARE_INSTALL_PREFIX "${CMAKE_INSTALL_PREFIX}/share" "Installation directory for read-only architecture-independent data")

set_path(BIN_INSTALL_DIR "${EXEC_INSTALL_PREFIX}/bin" "Installation directory for user executables")

set_path(DATA_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/dino" "Installation directory for dino-specific data")

set_path(APPDATA_FILE_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/metainfo" "Installation directory for .appdata.xml files")

set_path(DESKTOP_FILE_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/applications" "Installation directory for .desktop files")

set_path(SERVICE_FILE_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/dbus-1/services" "Installation directory for .service files")

set_path(ICON_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/icons" "Installation directory for icons")

set_path(INCLUDE_INSTALL_DIR "${EXEC_INSTALL_PREFIX}/include" "Installation directory for C header files")

set_path(LIB_INSTALL_DIR "${EXEC_INSTALL_PREFIX}/${LIBDIR_NAME}" "Installation directory for object code libraries")

set_path(LOCALE_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/locale" "Installation directory for locale files")

set_path(PLUGIN_INSTALL_DIR "${LIB_INSTALL_DIR}/dino/plugins" "Installation directory for dino plugin object code files")

set_path(VAPI_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/vala/vapi" "Installation directory for Vala API files")

set(TARGET_INSTALL LIBRARY DESTINATION ${LIB_INSTALL_DIR} RUNTIME DESTINATION ${BIN_INSTALL_DIR} PUBLIC_HEADER DESTINATION ${INCLUDE_INSTALL_DIR} ARCHIVE DESTINATION ${LIB_INSTALL_DIR})

set(PLUGIN_INSTALL LIBRARY DESTINATION ${PLUGIN_INSTALL_DIR} RUNTIME DESTINATION ${PLUGIN_INSTALL_DIR})

include(CheckCCompilerFlag)

include(CheckCSourceCompiles)

macro(AddCFlagIfSupported list flag)

string(REGEX REPLACE "[^a-zA-Z_0-9]+" "_" flag_name ${flag})

check_c_compiler_flag(${flag} COMPILER_SUPPORTS${flag_name})

if (${COMPILER_SUPPORTS${flag_name}})

set(${list} "${${list}} ${flag}")

endif ()

endmacro()

if ("Ninja" STREQUAL ${CMAKE_GENERATOR})

AddCFlagIfSupported(CMAKE_C_FLAGS -fdiagnostics-color)

endif ()

# Flags for all C files

AddCFlagIfSupported(CMAKE_C_FLAGS -Wall)

AddCFlagIfSupported(CMAKE_C_FLAGS -Wextra)

AddCFlagIfSupported(CMAKE_C_FLAGS -Werror=format-security)

AddCFlagIfSupported(CMAKE_C_FLAGS -Wno-duplicate-decl-specifier)

if (NOT VALA_WARN)

set(VALA_WARN "conversion")

endif ()

set(VALA_WARN "${VALA_WARN}" CACHE STRING "Which warnings to show when invoking C compiler on Vala compiler output")

set_property(CACHE VALA_WARN PROPERTY STRINGS "all;unused;qualifier;conversion;deprecated;format;none")
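
# Usage sketch (the build directory layout is an assumption): VALA_WARN is
# tested with IN_LIST below, so it is treated as a semicolon-separated list
# of the categories named in the STRINGS property above, e.g.
#   cmake -DVALA_WARN="unused;deprecated" ..
# For every category left out of the list, the matching -Wno-* flags are
# appended to VALA_CFLAGS when the C compiler accepts them; "all" skips every
# suppression.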

# Vala generates some unused stuff

if (NOT ("all" IN_LIST VALA_WARN OR "unused" IN_LIST VALA_WARN))

AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-but-set-variable)

AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-function)

AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-label)

AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-parameter)

AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-value)

AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-variable)

endif ()

if (NOT ("all" IN_LIST VALA_WARN OR "qualifier" IN_LIST VALA_WARN))

AddCFlagIfSupported(VALA_CFLAGS -Wno-discarded-qualifiers)

AddCFlagIfSupported(VALA_CFLAGS -Wno-discarded-array-qualifiers)

endif ()

if (NOT ("all" IN_LIST VALA_WARN OR "deprecated" IN_LIST VALA_WARN))

AddCFlagIfSupported(VALA_CFLAGS -Wno-deprecated-declarations)

endif ()

if (NOT ("all" IN_LIST VALA_WARN OR "format" IN_LIST VALA_WARN))

AddCFlagIfSupported(VALA_CFLAGS -Wno-missing-braces)

endif ()

if (NOT ("all" IN_LIST VALA_WARN OR "conversion" IN_LIST VALA_WARN))

AddCFlagIfSupported(VALA_CFLAGS -Wno-int-conversion)

AddCFlagIfSupported(VALA_CFLAGS -Wno-pointer-sign)

AddCFlagIfSupported(VALA_CFLAGS -Wno-incompatible-pointer-types)

endif ()

try_compile(__WITHOUT_FILE_OFFSET_BITS_64 ${CMAKE_CURRENT_BINARY_DIR} ${CMAKE_SOURCE_DIR}/cmake/LargeFileOffsets.c COMPILE_DEFINITIONS ${CMAKE_REQUIRED_DEFINITIONS})

if (NOT __WITHOUT_FILE_OFFSET_BITS_64)

try_compile(__WITH_FILE_OFFSET_BITS_64 ${CMAKE_CURRENT_BINARY_DIR} ${CMAKE_SOURCE_DIR}/cmake/LargeFileOffsets.c COMPILE_DEFINITIONS ${CMAKE_REQUIRED_DEFINITIONS} -D_FILE_OFFSET_BITS=64)

if (__WITH_FILE_OFFSET_BITS_64)

AddCFlagIfSupported(CMAKE_C_FLAGS -D_FILE_OFFSET_BITS=64)

message(STATUS "Enabled large file support using _FILE_OFFSET_BITS=64")

else (__WITH_FILE_OFFSET_BITS_64)

message(STATUS "Large file support not available")

endif (__WITH_FILE_OFFSET_BITS_64)

unset(__WITH_FILE_OFFSET_BITS_64)

endif (NOT __WITHOUT_FILE_OFFSET_BITS_64)

unset(__WITHOUT_FILE_OFFSET_BITS_64)

if ($ENV{USE_CCACHE})

# Configure CCache if available

find_program(CCACHE_BIN ccache)

mark_as_advanced(CCACHE_BIN)

if (CCACHE_BIN)

message(STATUS "Using ccache")

set_property(GLOBAL PROPERTY RULE_LAUNCH_COMPILE ${CCACHE_BIN})

set_property(GLOBAL PROPERTY RULE_LAUNCH_LINK ${CCACHE_BIN})

else (CCACHE_BIN)

message(STATUS "USE_CCACHE was set but ccache was not found")

endif (CCACHE_BIN)

endif ($ENV{USE_CCACHE})

if (NOT NO_DEBUG)

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -g")

set(CMAKE_VALA_FLAGS "${CMAKE_VALA_FLAGS} -g")

endif (NOT NO_DEBUG)

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${CMAKE_BINARY_DIR})

set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${CMAKE_BINARY_DIR})

set(GTK3_GLOBAL_VERSION 3.22)

set(GLib_GLOBAL_VERSION 2.38)

set(ICU_GLOBAL_VERSION 57)

if (NOT VALA_EXECUTABLE)

unset(VALA_EXECUTABLE CACHE)

endif ()

find_package(Vala 0.34 REQUIRED)

if (VALA_VERSION VERSION_GREATER "0.34.90" AND VALA_VERSION VERSION_LESS "0.36.1" OR # Due to a bug on 0.36.0 (and pre-releases), we need to disable FAST_VAPI

VALA_VERSION VERSION_EQUAL "0.44.10" OR VALA_VERSION VERSION_EQUAL "0.46.4" OR VALA_VERSION VERSION_EQUAL "0.47.1") # See Dino issue #646

set(DISABLE_FAST_VAPI yes)

endif ()

include(${VALA_USE_FILE})

include(MultiFind)

include(GlibCompileResourcesSupport)

set(CMAKE_VALA_FLAGS "${CMAKE_VALA_FLAGS} --target-glib=${GLib_GLOBAL_VERSION}")

add_subdirectory(qlite)

add_subdirectory(xmpp-vala)

add_subdirectory(libdino)

add_subdirectory(main)

add_subdirectory(plugins)

# uninstall target

configure_file("${CMAKE_SOURCE_DIR}/cmake/cmake_uninstall.cmake.in" "${CMAKE_BINARY_DIR}/cmake_uninstall.cmake" IMMEDIATE @ONLY)

add_custom_target(uninstall COMMAND ${CMAKE_COMMAND} -P ${CMAKE_BINARY_DIR}/cmake_uninstall.cmake COMMENT "Uninstall the project...")
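
# Usage sketch: the custom target above can be invoked through CMake's
# generic build driver from the build directory, e.g.
#   cmake --build . --target uninstall
# What gets removed depends on cmake/cmake_uninstall.cmake.in, which is not
# shown here.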

dino-0.1.0/LICENSE

 GNU GENERAL PUBLIC LICENSE

Version 3, 29 June 2007

Copyright (C) 2007 Free Software Foundation, Inc.

Everyone is permitted to copy and distribute verbatim copies

of this license document, but changing it is not allowed.

Preamble

The GNU General Public License is a free, copyleft license for

software and other kinds of works.

The licenses for most software and other practical works are designed

to take away your freedom to share and change the works. By contrast,

the GNU General Public License is intended to guarantee your freedom to

share and change all versions of a program--to make sure it remains free

software for all its users. We, the Free Software Foundation, use the

GNU General Public License for most of our software; it applies also to

any other work released this way by its authors. You can apply it to

your programs, too.

When we speak of free software, we are referring to freedom, not

price. Our General Public Licenses are designed to make sure that you

have the freedom to distribute copies of free software (and charge for

them if you wish), that you receive source code or can get it if you

want it, that you can change the software or use pieces of it in new

free programs, and that you know you can do these things.

To protect your rights, we need to prevent others from denying you

these rights or asking you to surrender the rights. Therefore, you have

certain responsibilities if you distribute copies of the software, or if

you modify it: responsibilities to respect the freedom of others.

For example, if you distribute copies of such a program, whether

gratis or for a fee, you must pass on to the recipients the same

freedoms that you received. You must make sure that they, too, receive

or can get the source code. And you must show them these terms so they

know their rights.

Developers that use the GNU GPL protect your rights with two steps:

(1) assert copyright on the software, and (2) offer you this License

giving you legal permission to copy, distribute and/or modify it.

For the developers' and authors' protection, the GPL clearly explains

that there is no warranty for this free software. For both users' and

authors' sake, the GPL requires that modified versions be marked as

changed, so that their problems will not be attributed erroneously to

authors of previous versions.

Some devices are designed to deny users access to install or run

modified versions of the software inside them, although the manufacturer

can do so. This is fundamentally incompatible with the aim of

protecting users' freedom to change the software. The systematic

pattern of such abuse occurs in the area of products for individuals to

use, which is precisely where it is most unacceptable. Therefore, we

have designed this version of the GPL to prohibit the practice for those

products. If such problems arise substantially in other domains, we

stand ready to extend this provision to those domains in future versions

of the GPL, as needed to protect the freedom of users.

Finally, every program is threatened constantly by software patents.

States should not allow patents to restrict development and use of

software on general-purpose computers, but in those that do, we wish to

avoid the special danger that patents applied to a free program could

make it effectively proprietary. To prevent this, the GPL assures that

patents cannot be used to render the program non-free.

The precise terms and conditions for copying, distribution and

modification follow.

TERMS AND CONDITIONS

0. Definitions.

"This License" refers to version 3 of the GNU General Public License.

"Copyright" also means copyright-like laws that apply to other kinds of

works, such as semiconductor masks.

"The Program" refers to any copyrightable work licensed under this

License. Each licensee is addressed as "you". "Licensees" and

"recipients" may be individuals or organizations.

To "modify" a work means to copy from or adapt all or part of the work

in a fashion requiring copyright permission, other than the making of an

exact copy. The resulting work is called a "modified version" of the

earlier work or a work "based on" the earlier work.

A "covered work" means either the unmodified Program or a work based

on the Program.

To "propagate" a work means to do anything with it that, without

permission, would make you directly or secondarily liable for

infringement under applicable copyright law, except executing it on a

computer or modifying a private copy. Propagation includes copying,

distribution (with or without modification), making available to the

public, and in some countries other activities as well.

To "convey" a work means any kind of propagation that enables other

parties to make or receive copies. Mere interaction with a user through

a computer network, with no transfer of a copy, is not conveying.

An interactive user interface displays "Appropriate Legal Notices"

to the extent that it includes a convenient and prominently visible

feature that (1) displays an appropriate copyright notice, and (2)

tells the user that there is no warranty for the work (except to the

extent that warranties are provided), that licensees may convey the

work under this License, and how to view a copy of this License. If

the interface presents a list of user commands or options, such as a

menu, a prominent item in the list meets this criterion.

1. Source Code.

The "source code" for a work means the preferred form of the work

for making modifications to it. "Object code" means any non-source

form of a work.

A "Standard Interface" means an interface that either is an official

standard defined by a recognized standards body, or, in the case of

interfaces specified for a particular programming language, one that

is widely used among developers working in that language.

The "System Libraries" of an executable work include anything, other

than the work as a whole, that (a) is included in the normal form of

packaging a Major Component, but which is not part of that Major

Component, and (b) serves only to enable use of the work with that

Major Component, or to implement a Standard Interface for which an

implementation is available to the public in source code form. A

"Major Component", in this context, means a major essential component

(kernel, window system, and so on) of the specific operating system

(if any) on which the executable work runs, or a compiler used to

produce the work, or an object code interpreter used to run it.

The "Corresponding Source" for a work in object code form means all

the source code needed to generate, install, and (for an executable

work) run the object code and to modify the work, including scripts to

control those activities. However, it does not include the work's

System Libraries, or general-purpose tools or generally available free

programs which are used unmodified in performing those activities but

which are not part of the work. For example, Corresponding Source

includes interface definition files associated with source files for

the work, and the source code for shared libraries and dynamically

linked subprograms that the work is specifically designed to require,

such as by intimate data communication or control flow between those

subprograms and other parts of the work.

The Corresponding Source need not include anything that users

can regenerate automatically from other parts of the Corresponding

Source.

The Corresponding Source for a work in source code form is that

same work.

2. Basic Permissions.

All rights granted under this License are granted for the term of

copyright on the Program, and are irrevocable provided the stated

conditions are met. This License explicitly affirms your unlimited

permission to run the unmodified Program. The output from running a

covered work is covered by this License only if the output, given its

content, constitutes a covered work. This License acknowledges your

rights of fair use or other equivalent, as provided by copyright law.

You may make, run and propagate covered works that you do not

convey, without conditions so long as your license otherwise remains

in force. You may convey covered works to others for the sole purpose

of having them make modifications exclusively for you, or provide you

with facilities for running those works, provided that you comply with

the terms of this License in conveying all material for which you do

not control copyright. Those thus making or running the covered works

for you must do so exclusively on your behalf, under your direction

and control, on terms that prohibit them from making any copies of

your copyrighted material outside their relationship with you.

Conveying under any other circumstances is permitted solely under

the conditions stated below. Sublicensing is not allowed; section 10

makes it unnecessary.

3. Protecting Users' Legal Rights From Anti-Circumvention Law.

No covered work shall be deemed part of an effective technological

measure under any applicable law fulfilling obligations under article

11 of the WIPO copyright treaty adopted on 20 December 1996, or

similar laws prohibiting or restricting circumvention of such

measures.

When you convey a covered work, you waive any legal power to forbid

circumvention of technological measures to the extent such circumvention

is effected by exercising rights under this License with respect to

the covered work, and you disclaim any intention to limit operation or

modification of the work as a means of enforcing, against the work's

users, your or third parties' legal rights to forbid circumvention of

technological measures.

4. Conveying Verbatim Copies.

You may convey verbatim copies of the Program's source code as you

receive it, in any medium, provided that you conspicuously and

appropriately publish on each copy an appropriate copyright notice;

keep intact all notices stating that this License and any

non-permissive terms added in accord with section 7 apply to the code;

keep intact all notices of the absence of any warranty; and give all

recipients a copy of this License along with the Program.

You may charge any price or no price for each copy that you convey,

and you may offer support or warranty protection for a fee.

5. Conveying Modified Source Versions.

You may convey a work based on the Program, or the modifications to

produce it from the Program, in the form of source code under the

terms of section 4, provided that you also meet all of these conditions:

a) The work must carry prominent notices stating that you modified

it, and giving a relevant date.

b) The work must carry prominent notices stating that it is

released under this License and any conditions added under section

7. This requirement modifies the requirement in section 4 to

"keep intact all notices".

c) You must license the entire work, as a whole, under this

License to anyone who comes into possession of a copy. This

License will therefore apply, along with any applicable section 7

additional terms, to the whole of the work, and all its parts,

regardless of how they are packaged. This License gives no

permission to license the work in any other way, but it does not

invalidate such permission if you have separately received it.

d) If the work has interactive user interfaces, each must display

Appropriate Legal Notices; however, if the Program has interactive

interfaces that do not display Appropriate Legal Notices, your

work need not make them do so.

A compilation of a covered work with other separate and independent

works, which are not by their nature extensions of the covered work,

and which are not combined with it such as to form a larger program,

in or on a volume of a storage or distribution medium, is called an

"aggregate" if the compilation and its resulting copyright are not

used to limit the access or legal rights of the compilation's users

beyond what the individual works permit. Inclusion of a covered work

in an aggregate does not cause this License to apply to the other

parts of the aggregate.

6. Conveying Non-Source Forms.

You may convey a covered work in object code form under the terms

of sections 4 and 5, provided that you also convey the

machine-readable Corresponding Source under the terms of this License,

in one of these ways:

a) Convey the object code in, or embodied in, a physical product

(including a physical distribution medium), accompanied by the

Corresponding Source fixed on a durable physical medium

customarily used for software interchange.

b) Convey the object code in, or embodied in, a physical product

(including a physical distribution medium), accompanied by a

written offer, valid for at least three years and valid for as

long as you offer spare parts or customer support for that product

model, to give anyone who possesses the object code either (1) a

copy of the Corresponding Source for all the software in the

product that is covered by this License, on a durable physical

medium customarily used for software interchange, for a price no

more than your reasonable cost of physically performing this

conveying of source, or (2) access to copy the

Corresponding Source from a network server at no charge.

c) Convey individual copies of the object code with a copy of the

written offer to provide the Corresponding Source. This

alternative is allowed only occasionally and noncommercially, and

only if you received the object code with such an offer, in accord

with subsection 6b.

d) Convey the object code by offering access from a designated

place (gratis or for a charge), and offer equivalent access to the

Corresponding Source in the same way through the same place at no

further charge. You need not require recipients to copy the

Corresponding Source along with the object code. If the place to

copy the object code is a network server, the Corresponding Source

may be on a different server (operated by you or a third party)

that supports equivalent copying facilities, provided you maintain

clear directions next to the object code saying where to find the

Corresponding Source. Regardless of what server hosts the

Corresponding Source, you remain obligated to ensure that it is

available for as long as needed to satisfy these requirements.

e) Convey the object code using peer-to-peer transmission, provided

you inform other peers where the object code and Corresponding

Source of the work are being offered to the general public at no

charge under subsection 6d.

A separable portion of the object code, whose source code is excluded

from the Corresponding Source as a System Library, need not be

included in conveying the object code work.

A "User Product" is either (1) a "consumer product", which means any

tangible personal property which is normally used for personal, family,

or household purposes, or (2) anything designed or sold for incorporation

into a dwelling. In determining whether a product is a consumer product,

doubtful cases shall be resolved in favor of coverage. For a particular

product received by a particular user, "normally used" refers to a

typical or common use of that class of product, regardless of the status

of the particular user or of the way in which the particular user

actually uses, or expects or is expected to use, the product. A product

is a consumer product regardless of whether the product has substantial

commercial, industrial or non-consumer uses, unless such uses represent

the only significant mode of use of the product.

"Installation Information" for a User Product means any methods,

procedures, authorization keys, or other information required to install

and execute modified versions of a covered work in that User Product from

a modified version of its Corresponding Source. The information must

suffice to ensure that the continued functioning of the modified object

code is in no case prevented or interfered with solely because

modification has been made.

If you convey an object code work under this section in, or with, or

specifically for use in, a User Product, and the conveying occurs as

part of a transaction in which the right of possession and use of the

User Product is transferred to the recipient in perpetuity or for a

fixed term (regardless of how the transaction is characterized), the

Corresponding Source conveyed under this section must be accompanied

by the Installation Information. But this requirement does not apply

if neither you nor any third party retains the ability to install

modified object code on the User Product (for example, the work has

been installed in ROM).

The requirement to provide Installation Information does not include a

requirement to continue to provide support service, warranty, or updates

for a work that has been modified or installed by the recipient, or for

the User Product in which it has been modified or installed. Access to a

network may be denied when the modification itself materially and

adversely affects the operation of the network or violates the rules and

protocols for communication across the network.

Corresponding Source conveyed, and Installation Information provided,

in accord with this section must be in a format that is publicly

documented (and with an implementation available to the public in

source code form), and must require no special password or key for

unpacking, reading or copying.

7. Additional Terms.

"Additional permissions" are terms that supplement the terms of this

License by making exceptions from one or more of its conditions.

Additional permissions that are applicable to the entire Program shall

be treated as though they were included in this License, to the extent

that they are valid under applicable law. If additional permissions

apply only to part of the Program, that part may be used separately

under those permissions, but the entire Program remains governed by

this License without regard to the additional permissions.

When you convey a copy of a covered work, you may at your option

remove any additional permissions from that copy, or from any part of

it. (Additional permissions may be written to require their own

removal in certain cases when you modify the work.) You may place

additional permissions on material, added by you to a covered work,

for which you have or can give appropriate copyright permission.

Notwithstanding any other provision of this License, for material you

add to a covered work, you may (if authorized by the copyright holders of

that material) supplement the terms of this License with terms:

a) Disclaiming warranty or limiting liability differently from the

terms of sections 15 and 16 of this License; or

b) Requiring preservation of specified reasonable legal notices or

author attributions in that material or in the Appropriate Legal

Notices displayed by works containing it; or

c) Prohibiting misrepresentation of the origin of that material, or

requiring that modified versions of such material be marked in

reasonable ways as different from the original version; or

d) Limiting the use for publicity purposes of names of licensors or

authors of the material; or

e) Declining to grant rights under trademark law for use of some

trade names, trademarks, or service marks; or

f) Requiring indemnification of licensors and authors of that

material by anyone who conveys the material (or modified versions of

it) with contractual assumptions of liability to the recipient, for

any liability that these contractual assumptions directly impose on

those licensors and authors.

All other non-permissive additional terms are considered "further

restrictions" within the meaning of section 10. If the Program as you

received it, or any part of it, contains a notice stating that it is

governed by this License along with a term that is a further

restriction, you may remove that term. If a license document contains

a further restriction but permits relicensing or conveying under this

License, you may add to a covered work material governed by the terms

of that license document, provided that the further restriction does

not survive such relicensing or conveying.

If you add terms to a covered work in accord with this section, you

must place, in the relevant source files, a statement of the

additional terms that apply to those files, or a notice indicating

where to find the applicable terms.

Additional terms, permissive or non-permissive, may be stated in the

form of a separately written license, or stated as exceptions;

the above requirements apply either way.

8. Termination.

You may not propagate or modify a covered work except as expressly

provided under this License. Any attempt otherwise to propagate or

modify it is void, and will automatically terminate your rights under

this License (including any patent licenses granted under the third

paragraph of section 11).

However, if you cease all violation of this License, then your

license from a particular copyright holder is reinstated (a)

provisionally, unless and until the copyright holder explicitly and

finally terminates your license, and (b) permanently, if the copyright

holder fails to notify you of the violation by some reasonable means

prior to 60 days after the cessation.

Moreover, your license from a particular copyright holder is

reinstated permanently if the copyright holder notifies you of the

violation by some reasonable means, this is the first time you have

received notice of violation of this License (for any work) from that

copyright holder, and you cure the violation prior to 30 days after

your receipt of the notice.

Termination of your rights under this section does not terminate the

licenses of parties who have received copies or rights from you under

this License. If your rights have been terminated and not permanently

reinstated, you do not qualify to receive new licenses for the same

material under section 10.

9. Acceptance Not Required for Having Copies.

You are not required to accept this License in order to receive or

run a copy of the Program. Ancillary propagation of a covered work

occurring solely as a consequence of using peer-to-peer transmission

to receive a copy likewise does not require acceptance. However,

nothing other than this License grants you permission to propagate or

modify any covered work. These actions infringe copyright if you do

not accept this License. Therefore, by modifying or propagating a

covered work, you indicate your acceptance of this License to do so.

10. Automatic Licensing of Downstream Recipients.

Each time you convey a covered work, the recipient automatically

receives a license from the original licensors, to run, modify and

propagate that work, subject to this License. You are not responsible

for enforcing compliance by third parties with this License.

An "entity transaction" is a transaction transferring control of an

organization, or substantially all assets of one, or subdividing an

organization, or merging organizations. If propagation of a covered

work results from an entity transaction, each party to that

transaction who receives a copy of the work also receives whatever

licenses to the work the party's predecessor in interest had or could

give under the previous paragraph, plus a right to possession of the

Corresponding Source of the work from the predecessor in interest, if

the predecessor has it or can get it with reasonable efforts.

You may not impose any further restrictions on the exercise of the

rights granted or affirmed under this License. For example, you may

not impose a license fee, royalty, or other charge for exercise of

rights granted under this License, and you may not initiate litigation

(including a cross-claim or counterclaim in a lawsuit) alleging that

any patent claim is infringed by making, using, selling, offering for

sale, or importing the Program or any portion of it.

11. Patents.

A "contributor" is a copyright holder who authorizes use under this

License of the Program or a work on which the Program is based. The

work thus licensed is called the contributor's "contributor version".

A contributor's "essential patent claims" are all patent claims

owned or controlled by the contributor, whether already acquired or

hereafter acquired, that would be infringed by some manner, permitted

by this License, of making, using, or selling its contributor version,

but do not include claims that would be infringed only as a

consequence of further modification of the contributor version. For

purposes of this definition, "control" includes the right to grant

patent sublicenses in a manner consistent with the requirements of

this License.

Each contributor grants you a non-exclusive, worldwide, royalty-free

patent license under the contributor's essential patent claims, to

make, use, sell, offer for sale, import and otherwise run, modify and

propagate the contents of its contributor version.

In the following three paragraphs, a "patent license" is any express

agreement or commitment, however denominated, not to enforce a patent

(such as an express permission to practice a patent or covenant not to

sue for patent infringement). To "grant" such a patent license to a

party means to make such an agreement or commitment not to enforce a

patent against the party.

If you convey a covered work, knowingly relying on a patent license,

and the Corresponding Source of the work is not available for anyone

to copy, free of charge and under the terms of this License, through a

publicly available network server or other readily accessible means,

then you must either (1) cause the Corresponding Source to be so

available, or (2) arrange to deprive yourself of the benefit of the

patent license for this particular work, or (3) arrange, in a manner

consistent with the requirements of this License, to extend the patent

license to downstream recipients. "Knowingly relying" means you have

actual knowledge that, but for the patent license, your conveying the

covered work in a country, or your recipient's use of the covered work

in a country, would infringe one or more identifiable patents in that

country that you have reason to believe are valid.

If, pursuant to or in connection with a single transaction or

arrangement, you convey, or propagate by procuring conveyance of, a

covered work, and grant a patent license to some of the parties

receiving the covered work authorizing them to use, propagate, modify

or convey a specific copy of the covered work, then the patent license

you grant is automatically extended to all recipients of the covered

work and works based on it.

A patent license is "discriminatory" if it does not include within

the scope of its coverage, prohibits the exercise of, or is

conditioned on the non-exercise of one or more of the rights that are

specifically granted under this License. You may not convey a covered

work if you are a party to an arrangement with a third party that is

in the business of distributing software, under which you make payment

to the third party based on the extent of your activity of conveying

the work, and under which the third party grants, to any of the

parties who would receive the covered work from you, a discriminatory

patent license (a) in connection with copies of the covered work

conveyed by you (or copies made from those copies), or (b) primarily

for and in connection with specific products or compilations that

contain the covered work, unless you entered into that arrangement,

or that patent license was granted, prior to 28 March 2007.

Nothing in this License shall be construed as excluding or limiting

any implied license or other defenses to infringement that may

otherwise be available to you under applicable patent law.

12. No Surrender of Others' Freedom.

If conditions are imposed on you (whether by court order, agreement or

otherwise) that contradict the conditions of this License, they do not

excuse you from the conditions of this License. If you cannot convey a

covered work so as to satisfy simultaneously your obligations under this

License and any other pertinent obligations, then as a consequence you may

not convey it at all. For example, if you agree to terms that obligate you

to collect a royalty for further conveying from those to whom you convey

the Program, the only way you could satisfy both those terms and this

License would be to refrain entirely from conveying the Program.

13. Use with the GNU Affero General Public License.

Notwithstanding any other provision of this License, you have

permission to link or combine any covered work with a work licensed

under version 3 of the GNU Affero General Public License into a single

combined work, and to convey the resulting work. The terms of this

License will continue to apply to the part which is the covered work,

but the special requirements of the GNU Affero General Public License,

section 13, concerning interaction through a network will apply to the

combination as such.

14. Revised Versions of this License.

The Free Software Foundation may publish revised and/or new versions of

the GNU General Public License from time to time. Such new versions will

be similar in spirit to the present version, but may differ in detail to

address new problems or concerns.

Each version is given a distinguishing version number. If the

Program specifies that a certain numbered version of the GNU General

Public License "or any later version" applies to it, you have the

option of following the terms and conditions either of that numbered

version or of any later version published by the Free Software

Foundation. If the Program does not specify a version number of the

GNU General Public License, you may choose any version ever published

by the Free Software Foundation.

If the Program specifies that a proxy can decide which future

versions of the GNU General Public License can be used, that proxy's

public statement of acceptance of a version permanently authorizes you

to choose that version for the Program.

Later license versions may give you additional or different

permissions. However, no additional obligations are imposed on any

author or copyright holder as a result of your choosing to follow a

later version.

15. Disclaimer of Warranty.

THERE IS NO WARRANTY FOR THE PROGRAM, TO THE EXTENT PERMITTED BY

APPLICABLE LAW. EXCEPT WHEN OTHERWISE STATED IN WRITING THE COPYRIGHT

HOLDERS AND/OR OTHER PARTIES PROVIDE THE PROGRAM "AS IS" WITHOUT WARRANTY

OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING, BUT NOT LIMITED TO,

THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

PURPOSE. THE ENTIRE RISK AS TO THE QUALITY AND PERFORMANCE OF THE PROGRAM

IS WITH YOU. SHOULD THE PROGRAM PROVE DEFECTIVE, YOU ASSUME THE COST OF

ALL NECESSARY SERVICING, REPAIR OR CORRECTION.

16. Limitation of Liability.

IN NO EVENT UNLESS REQUIRED BY APPLICABLE LAW OR AGREED TO IN WRITING

WILL ANY COPYRIGHT HOLDER, OR ANY OTHER PARTY WHO MODIFIES AND/OR CONVEYS

THE PROGRAM AS PERMITTED ABOVE, BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY

GENERAL, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE

USE OR INABILITY TO USE THE PROGRAM (INCLUDING BUT NOT LIMITED TO LOSS OF

DATA OR DATA BEING RENDERED INACCURATE OR LOSSES SUSTAINED BY YOU OR THIRD

PARTIES OR A FAILURE OF THE PROGRAM TO OPERATE WITH ANY OTHER PROGRAMS),

EVEN IF SUCH HOLDER OR OTHER PARTY HAS BEEN ADVISED OF THE POSSIBILITY OF

SUCH DAMAGES.

17. Interpretation of Sections 15 and 16.

If the disclaimer of warranty and limitation of liability provided

above cannot be given local legal effect according to their terms,

reviewing courts shall apply local law that most closely approximates

an absolute waiver of all civil liability in connection with the

Program, unless a warranty or assumption of liability accompanies a

copy of the Program in return for a fee.

END OF TERMS AND CONDITIONS

How to Apply These Terms to Your New Programs

If you develop a new program, and you want it to be of the greatest

possible use to the public, the best way to achieve this is to make it

free software which everyone can redistribute and change under these terms.

To do so, attach the following notices to the program. It is safest

to attach them to the start of each source file to most effectively

state the exclusion of warranty; and each file should have at least

the "copyright" line and a pointer to where the full notice is found.

Copyright (C)

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU General Public License as published by

the Free Software Foundation, either version 3 of the License, or

(at your option) any later version.

This program is distributed in the hope that it will be useful,

but WITHOUT ANY WARRANTY; without even the implied warranty of

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

GNU General Public License for more details.

You should have received a copy of the GNU General Public License

along with this program. If not, see .

Also add information on how to contact you by electronic and paper mail.

If the program does terminal interaction, make it output a short

notice like this when it starts in an interactive mode:

Copyright (C)

This program comes with ABSOLUTELY NO WARRANTY; for details type `show w'.

This is free software, and you are welcome to redistribute it

under certain conditions; type `show c' for details.

The hypothetical commands `show w' and `show c' should show the appropriate

parts of the General Public License. Of course, your program's commands

might be different; for a GUI interface, you would use an "about box".

You should also get your employer (if you work as a programmer) or school,

if any, to sign a "copyright disclaimer" for the program, if necessary.

For more information on this, and how to apply and follow the GNU GPL, see

.

The GNU General Public License does not permit incorporating your program

into proprietary programs. If your program is a subroutine library, you

may consider it more useful to permit linking proprietary applications with

the library. If this is what you want to do, use the GNU Lesser General

Public License instead of this License. But first, please read

.

dino-0.1.0/README.md

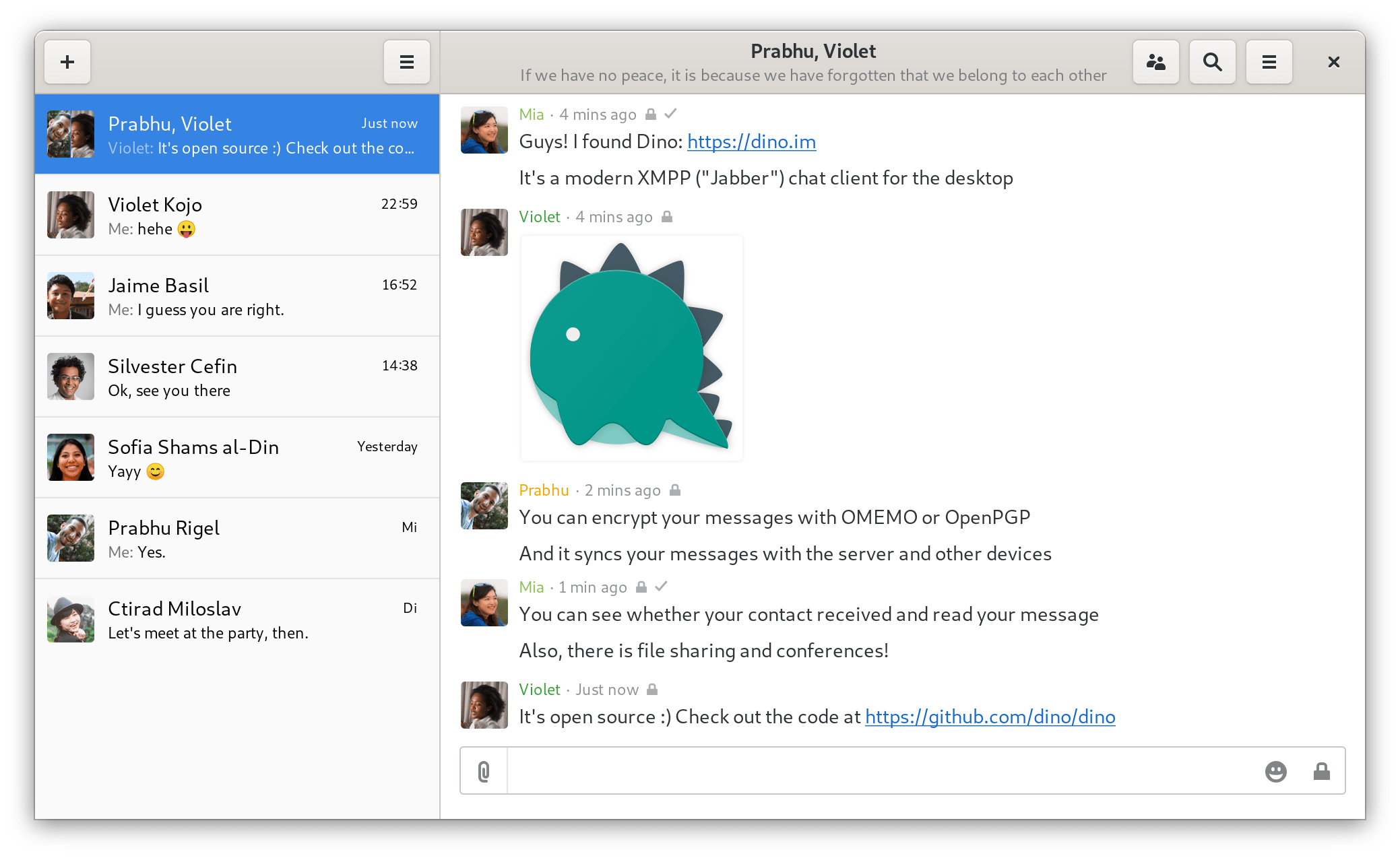

=======

Installation

------------

Have a look at the [prebuilt packages](https://github.com/dino/dino/wiki/Distribution-Packages).

Build

-----

Make sure to install all [dependencies](https://github.com/dino/dino/wiki/Build#dependencies).

    ./configure

    make

    build/dino

Resources

---------

- Check out the [Dino website](https://dino.im).

- Join our XMPP conference room at `chat@dino.im`.

- The [wiki](https://github.com/dino/dino/wiki) provides additional information.

Contribute

----------

- Pull requests are welcome. Please discuss bigger changes in the conference room first.

- Look at [how to debug](https://github.com/dino/dino/wiki/Debugging) Dino before you report a bug.

- Help [translate](https://hosted.weblate.org/projects/dino/) Dino into your language.

License

-------

Dino - Modern Jabber/XMPP Client using GTK+/Vala

Copyright (C) 2016-2020 Dino contributors

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU General Public License as published by

the Free Software Foundation, either version 3 of the License, or

(at your option) any later version.

This program is distributed in the hope that it will be useful,

but WITHOUT ANY WARRANTY; without even the implied warranty of

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

GNU General Public License for more details.

You should have received a copy of the GNU General Public License

along with this program. If not, see .

dino-0.1.0/VERSION

RELEASE 0.1.0

dino-0.1.0/cmake/BuildTargetScript.cmake

# This file is meant to be invoked at build time. It generates the needed

# resource XML file.

# Input variables that need to be provided when invoking this script:

# GXML_OUTPUT The output file path where to save the XML file.

# GXML_COMPRESS_ALL Sets all COMPRESS flags in all resources in resource

# list.

# GXML_NO_COMPRESS_ALL Removes all COMPRESS flags in all resources in

# resource list.

# GXML_STRIPBLANKS_ALL Sets all STRIPBLANKS flags in all resources in

# resource list.

# GXML_NO_STRIPBLANKS_ALL Removes all STRIPBLANKS flags in all resources in

# resource list.

# GXML_TOPIXDATA_ALL Sets all TOPIXDATA flags in all resources in resource

# list.

# GXML_NO_TOPIXDATA_ALL Removes all TOPIXDATA flags in all resources in

# resource list.

# GXML_PREFIX Overrides the resource prefix that is prepended to

# each relative name in registered resources.

# GXML_RESOURCES The list of resource files. Absolute and relative paths

# are both accepted.

# Include the GENERATE_GXML() function.

include(${CMAKE_CURRENT_LIST_DIR}/GenerateGXML.cmake)

# Set flags to actual invocation flags.

if(GXML_COMPRESS_ALL)

set(GXML_COMPRESS_ALL COMPRESS_ALL)

endif()

if(GXML_NO_COMPRESS_ALL)

set(GXML_NO_COMPRESS_ALL NO_COMPRESS_ALL)

endif()

if(GXML_STRIPBLANKS_ALL)

set(GXML_STRIPBLANKS_ALL STRIPBLANKS_ALL)

endif()

if(GXML_NO_STRIPBLANKS_ALL)

set(GXML_NO_STRIPBLANKS_ALL NO_STRIPBLANKS_ALL)

endif()

if(GXML_TOPIXDATA_ALL)

set(GXML_TOPIXDATA_ALL TOPIXDATA_ALL)

endif()

if(GXML_NO_TOPIXDATA_ALL)

set(GXML_NO_TOPIXDATA_ALL NO_TOPIXDATA_ALL)

endif()

# Replace " " with ";" to import the list over the command line. Otherwise

# CMake would interpret the passed resources as a single string.

string(REPLACE " " ";" GXML_RESOURCES ${GXML_RESOURCES})

# Invoke the gresource XML generation function.

generate_gxml(${GXML_OUTPUT}

${GXML_COMPRESS_ALL} ${GXML_NO_COMPRESS_ALL}

${GXML_STRIPBLANKS_ALL} ${GXML_NO_STRIPBLANKS_ALL}

${GXML_TOPIXDATA_ALL} ${GXML_NO_TOPIXDATA_ALL}

PREFIX ${GXML_PREFIX}

RESOURCES ${GXML_RESOURCES})
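
# Example invocation (a sketch, not part of the original script). The output
# path, prefix and resource names are hypothetical; the command assumes it is
# run from the project root and that GenerateGXML.cmake sits next to this file.
# The resources are passed as one space-separated string, which the
# string(REPLACE ...) call above turns into a CMake list.
#
#   cmake -D GXML_OUTPUT=build/resources/.gresource.xml \
#         -D GXML_PREFIX=/org/example/app \
#         -D "GXML_RESOURCES=window.ui menu.ui" \
#         -P cmake/BuildTargetScript.cmake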

dino-0.1.0/cmake/CompileGResources.cmake

include(CMakeParseArguments)

# Path to this file.

set(GCR_CMAKE_MACRO_DIR ${CMAKE_CURRENT_LIST_DIR})

# Compiles a gresource resource file from given resource files. Automatically

# creates the XML controlling file.

# The type of resource to generate (header, c-file or bundle) is automatically

# determined from TARGET file ending, if no TYPE is explicitly specified.

# The output file is stored in the provided variable "output".

# "xml_out" contains the variable where to output the XML path. Can be used to

# create custom targets or doing postprocessing.

# If you want to use preprocessing, you need to manually check the existence

# of the tools you use. This function doesn't check this for you, it just

# generates the XML file. glib-compile-resources will then throw a

# warning/error.

function(COMPILE_GRESOURCES output xml_out)

# Available options:

# COMPRESS_ALL, NO_COMPRESS_ALL Overrides the COMPRESS flag in all

# registered resources.

# STRIPBLANKS_ALL, NO_STRIPBLANKS_ALL Overrides the STRIPBLANKS flag in all

# registered resources.

# TOPIXDATA_ALL, NO_TOPIXDATA_ALL Overrides the TOPIXDATA flag in all

# registered resources.

set(CG_OPTIONS COMPRESS_ALL NO_COMPRESS_ALL

STRIPBLANKS_ALL NO_STRIPBLANKS_ALL

TOPIXDATA_ALL NO_TOPIXDATA_ALL)

# Available one value options:

# TYPE Type of resource to create. Valid options are:

# EMBED_C: A C-file that can be compiled with your project.

# EMBED_H: A header that can be included into your project.

# BUNDLE: Generates a resource bundle file that can be loaded

# at runtime.

# AUTO: Determine from target file ending. Need to specify

# target argument.

# PREFIX Overrides the resource prefix that is prepended to each

# relative file name in registered resources.

# SOURCE_DIR Overrides the resources base directory to search for resources.

# Normally this is set to the source directory with which CMake

# was invoked (CMAKE_SOURCE_DIR).

# TARGET Overrides the name of the output file/-s. Normally the output

# names from the glib-compile-resources tool are taken.

set(CG_ONEVALUEARGS TYPE PREFIX SOURCE_DIR TARGET)

# Available multi-value options:

# RESOURCES The list of resource files. Absolute and relative paths are both

# accepted; absolute paths are stripped down to relative ones. If the

# absolute path is not inside the given base directory SOURCE_DIR

# or CMAKE_SOURCE_DIR (if SOURCE_DIR is not overridden), this

# function aborts.

# OPTIONS Extra command line options passed to glib-compile-resources.

set(CG_MULTIVALUEARGS RESOURCES OPTIONS)

# Parse the arguments.

cmake_parse_arguments(CG_ARG

"${CG_OPTIONS}"

"${CG_ONEVALUEARGS}"

"${CG_MULTIVALUEARGS}"

"${ARGN}")

# Variable to store the double-quote (") string. Since escaping

# double-quotes in strings is not possible we need a helper variable that

# does this job for us.

set(Q \")

# Check invocation validity with the _UNPARSED_ARGUMENTS variable.

# If unrecognized parameters were passed, emit a warning.

if (CG_ARG_UNPARSED_ARGUMENTS)

set(CG_WARNMSG "Invocation of COMPILE_GRESOURCES with unrecognized")

set(CG_WARNMSG "${CG_WARNMSG} parameters. Parameters are:")

set(CG_WARNMSG "${CG_WARNMSG} ${CG_ARG_UNPARSED_ARGUMENTS}.")

message(WARNING ${CG_WARNMSG})

endif()

# Check invocation validity depending on generation mode (EMBED_C, EMBED_H

# or BUNDLE).

if ("${CG_ARG_TYPE}" STREQUAL "EMBED_C")

# EMBED_C mode, output compilable C-file.

set(CG_GENERATE_COMMAND_LINE "--generate-source")

set(CG_TARGET_FILE_ENDING "c")

elseif ("${CG_ARG_TYPE}" STREQUAL "EMBED_H")

# EMBED_H mode, output includable header file.

set(CG_GENERATE_COMMAND_LINE "--generate-header")

set(CG_TARGET_FILE_ENDING "h")

elseif ("${CG_ARG_TYPE}" STREQUAL "BUNDLE")

# BUNDLE mode, output resource bundle. Don't do anything since

# glib-compile-resources outputs a bundle when not specifying

# something else.

set(CG_TARGET_FILE_ENDING "gresource")

else()

# Everything else is AUTO mode, determine from target file ending.

if (CG_ARG_TARGET)

set(CG_GENERATE_COMMAND_LINE "--generate")

else()

set(CG_ERRMSG "AUTO mode given, but no target specified. Can't")

set(CG_ERRMSG "${CG_ERRMSG} determine output type. In function")

set(CG_ERRMSG "${CG_ERRMSG} COMPILE_GRESOURCES.")

message(FATAL_ERROR ${CG_ERRMSG})

endif()

endif()

# Check flag validity.

if (CG_ARG_COMPRESS_ALL AND CG_ARG_NO_COMPRESS_ALL)

set(CG_ERRMSG "COMPRESS_ALL and NO_COMPRESS_ALL simultaneously set. In")

set(CG_ERRMSG "${CG_ERRMSG} function COMPILE_GRESOURCES.")

message(FATAL_ERROR ${CG_ERRMSG})

endif()

if (CG_ARG_STRIPBLANKS_ALL AND CG_ARG_NO_STRIPBLANKS_ALL)

set(CG_ERRMSG "STRIPBLANKS_ALL and NO_STRIPBLANKS_ALL simultaneously")

set(CG_ERRMSG "${CG_ERRMSG} set. In function COMPILE_GRESOURCES.")

message(FATAL_ERROR ${CG_ERRMSG})

endif()

if (CG_ARG_TOPIXDATA_ALL AND CG_ARG_NO_TOPIXDATA_ALL)

set(CG_ERRMSG "TOPIXDATA_ALL and NO_TOPIXDATA_ALL simultaneously set.")

set(CG_ERRMSG "${CG_ERRMSG} In function COMPILE_GRESOURCES.")

message(FATAL_ERROR ${CG_ERRMSG})

endif()

# Check if there are any resources.

if (NOT CG_ARG_RESOURCES)

set(CG_ERRMSG "No resource files to process. In function")

set(CG_ERRMSG "${CG_ERRMSG} COMPILE_GRESOURCES.")

message(FATAL_ERROR ${CG_ERRMSG})

endif()

# Extract all dependencies for targets from resource list.

foreach(res ${CG_ARG_RESOURCES})

if (NOT(("${res}" STREQUAL "COMPRESS") OR

("${res}" STREQUAL "STRIPBLANKS") OR

("${res}" STREQUAL "TOPIXDATA")))

add_custom_command(

OUTPUT "${CMAKE_CURRENT_BINARY_DIR}/resources/${res}"

COMMAND ${CMAKE_COMMAND} -E copy "${CG_ARG_SOURCE_DIR}/${res}" "${CMAKE_CURRENT_BINARY_DIR}/resources/${res}"

MAIN_DEPENDENCY "${CG_ARG_SOURCE_DIR}/${res}")

list(APPEND CG_RESOURCES_DEPENDENCIES "${CMAKE_CURRENT_BINARY_DIR}/resources/${res}")

endif()

endforeach()

# Construct .gresource.xml path.

set(CG_XML_FILE_PATH "${CMAKE_CURRENT_BINARY_DIR}/resources/.gresource.xml")

# Generate gresources XML target.

list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")

list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_OUTPUT=${Q}${CG_XML_FILE_PATH}${Q}")

if(CG_ARG_COMPRESS_ALL)

list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")

list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_COMPRESS_ALL")

endif()

if(CG_ARG_NO_COMPRESS_ALL)

list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")

list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_NO_COMPRESS_ALL")

endif()

if(CG_ARG_STRIPBLANKS_ALL)

list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")

list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_STRIPBLANKS_ALL")

endif()

if(CG_ARG_NO_STRIPBLANKS_ALL)

list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")

list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_NO_STRIPBLANKS_ALL")

endif()

if(CG_ARG_TOPIXDATA_ALL)

list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")

list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_TOPIXDATA_ALL")

endif()

if(CG_ARG_NO_TOPIXDATA_ALL)

list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")

list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_NO_TOPIXDATA_ALL")

endif()

list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")

list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_PREFIX=${Q}${CG_ARG_PREFIX}${Q}")

list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")

list(APPEND CG_CMAKE_SCRIPT_ARGS

"GXML_RESOURCES=${Q}${CG_ARG_RESOURCES}${Q}")

list(APPEND CG_CMAKE_SCRIPT_ARGS "-P")

list(APPEND CG_CMAKE_SCRIPT_ARGS

"${Q}${GCR_CMAKE_MACRO_DIR}/BuildTargetScript.cmake${Q}")

get_filename_component(CG_XML_FILE_PATH_ONLY_NAME

"${CG_XML_FILE_PATH}" NAME)

set(CG_XML_CUSTOM_COMMAND_COMMENT

"Creating gresources XML file (${CG_XML_FILE_PATH_ONLY_NAME})")

add_custom_command(OUTPUT ${CG_XML_FILE_PATH}

COMMAND ${CMAKE_COMMAND}

ARGS ${CG_CMAKE_SCRIPT_ARGS}

DEPENDS ${CG_RESOURCES_DEPENDENCIES}

WORKING_DIRECTORY ${CMAKE_CURRENT_BINARY_DIR}

COMMENT ${CG_XML_CUSTOM_COMMAND_COMMENT})

# Create target manually if not set (to make sure glib-compile-resources

# doesn't change behaviour with its naming standards).

if (NOT CG_ARG_TARGET)

set(CG_ARG_TARGET "${CMAKE_CURRENT_BINARY_DIR}/resources")

set(CG_ARG_TARGET "${CG_ARG_TARGET}.${CG_TARGET_FILE_ENDING}")

endif()

# Default the source directory to CMAKE_SOURCE_DIR if not set.

if (NOT CG_ARG_SOURCE_DIR)

set(CG_ARG_SOURCE_DIR "${CMAKE_SOURCE_DIR}")

endif()

# Add compilation target for resources.

add_custom_command(OUTPUT ${CG_ARG_TARGET}

COMMAND ${GLIB_COMPILE_RESOURCES_EXECUTABLE}

ARGS

${CG_ARG_OPTIONS}

"--target=${Q}${CG_ARG_TARGET}${Q}"

"--sourcedir=${Q}${CG_ARG_SOURCE_DIR}${Q}"

${CG_GENERATE_COMMAND_LINE}

${CG_XML_FILE_PATH}

MAIN_DEPENDENCY ${CG_XML_FILE_PATH}

DEPENDS ${CG_RESOURCES_DEPENDENCIES}

WORKING_DIRECTORY ${CMAKE_BUILD_DIR})

# Set output and XML_OUT to parent scope.

set(${xml_out} ${CG_XML_FILE_PATH} PARENT_SCOPE)

set(${output} ${CG_ARG_TARGET} PARENT_SCOPE)

endfunction()
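
# Usage sketch (not part of the original file). The variable names, resource
# names, prefix and executable name below are hypothetical. The sketch assumes
# GLIB_COMPILE_RESOURCES_EXECUTABLE has been set beforehand, since the
# function above uses it without locating the tool itself, and it passes
# SOURCE_DIR explicitly because the per-resource copy commands read it.
#
#   find_program(GLIB_COMPILE_RESOURCES_EXECUTABLE glib-compile-resources)
#   compile_gresources(
#       EXAMPLE_RESOURCES_C      # receives the path of the generated C file
#       EXAMPLE_RESOURCES_XML    # receives the path of the generated XML file
#       TYPE EMBED_C
#       TARGET ${CMAKE_CURRENT_BINARY_DIR}/resources/resources.c
#       PREFIX /org/example/app
#       SOURCE_DIR ${CMAKE_CURRENT_SOURCE_DIR}/data
#       RESOURCES window.ui menu.ui
#   )
#   add_executable(example-app main.c ${EXAMPLE_RESOURCES_C})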

dino-0.1.0/cmake/ComputeVersion.cmake

include(CMakeParseArguments)

function(_compute_version_from_file)

set_property(DIRECTORY APPEND PROPERTY CMAKE_CONFIGURE_DEPENDS ${CMAKE_SOURCE_DIR}/VERSION)

if (NOT EXISTS ${CMAKE_SOURCE_DIR}/VERSION)

set(VERSION_FOUND 0 PARENT_SCOPE)

return()

endif ()

file(STRINGS ${CMAKE_SOURCE_DIR}/VERSION VERSION_FILE)

string(REPLACE " " ";" VERSION_FILE "${VERSION_FILE}")

cmake_parse_arguments(VERSION_FILE "" "RELEASE;PRERELEASE" "" ${VERSION_FILE})

if (DEFINED VERSION_FILE_RELEASE)

string(STRIP "${VERSION_FILE_RELEASE}" VERSION_FILE_RELEASE)

set(VERSION_IS_RELEASE 1 PARENT_SCOPE)

set(VERSION_FULL "${VERSION_FILE_RELEASE}" PARENT_SCOPE)

set(VERSION_FOUND 1 PARENT_SCOPE)

elseif (DEFINED VERSION_FILE_PRERELEASE)

string(STRIP "${VERSION_FILE_PRERELEASE}" VERSION_FILE_PRERELEASE)

set(VERSION_IS_RELEASE 0 PARENT_SCOPE)

set(VERSION_FULL "${VERSION_FILE_PRERELEASE}" PARENT_SCOPE)

set(VERSION_FOUND 1 PARENT_SCOPE)

else ()

set(VERSION_FOUND 0 PARENT_SCOPE)

endif ()

endfunction(_compute_version_from_file)
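
# Sketch of the VERSION file format this function accepts, inferred from the
# parsing above. The version strings are illustrative, not taken from the source.
# The file content is split on spaces and read as a keyword followed by one value:
#
#   RELEASE 0.1.0
#       -> VERSION_IS_RELEASE=1, VERSION_FULL=0.1.0, VERSION_FOUND=1
#
#   PRERELEASE 0.1.0~git5.20200101.abc1234
#       -> VERSION_IS_RELEASE=0, VERSION_FULL=0.1.0~git5.20200101.abc1234, VERSION_FOUND=1
#
# If both keywords appear, RELEASE takes precedence (it is tested first);
# if neither appears, VERSION_FOUND is set to 0.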

function(_compute_version_from_git)

set_property(DIRECTORY APPEND PROPERTY CMAKE_CONFIGURE_DEPENDS ${CMAKE_SOURCE_DIR}/.git)

if (NOT GIT_EXECUTABLE)

find_package(Git QUIET)

if (NOT GIT_FOUND)

return()

endif ()

endif (NOT GIT_EXECUTABLE)

# Git tag

execute_process(

COMMAND "${GIT_EXECUTABLE}" describe --tags --abbrev=0

WORKING_DIRECTORY "${CMAKE_SOURCE_DIR}"

RESULT_VARIABLE git_result

OUTPUT_VARIABLE git_tag

ERROR_VARIABLE git_error

OUTPUT_STRIP_TRAILING_WHITESPACE

ERROR_STRIP_TRAILING_WHITESPACE

)

if (NOT git_result EQUAL 0)

return()

endif (NOT git_result EQUAL 0)

if (git_tag MATCHES "^v?([0-9]+[.]?[0-9]*[.]?[0-9]*)(-[.0-9A-Za-z-]+)?([+][.0-9A-Za-z-]+)?$")

set(VERSION_LAST_RELEASE "${CMAKE_MATCH_1}")

else ()

return()

endif ()

# Git describe

execute_process(

COMMAND "${GIT_EXECUTABLE}" describe --tags

WORKING_DIRECTORY "${CMAKE_SOURCE_DIR}"

RESULT_VARIABLE git_result

OUTPUT_VARIABLE git_describe

ERROR_VARIABLE git_error

OUTPUT_STRIP_TRAILING_WHITESPACE

ERROR_STRIP_TRAILING_WHITESPACE

)

if (NOT git_result EQUAL 0)

return()

endif (NOT git_result EQUAL 0)

if ("${git_tag}" STREQUAL "${git_describe}")

set(VERSION_IS_RELEASE 1)

else ()

set(VERSION_IS_RELEASE 0)

if (git_describe MATCHES "-([0-9]+)-g([0-9a-f]+)$")

set(VERSION_TAG_OFFSET "${CMAKE_MATCH_1}")

set(VERSION_COMMIT_HASH "${CMAKE_MATCH_2}")

endif ()

execute_process(

COMMAND "${GIT_EXECUTABLE}" show --format=%cd --date=format:%Y%m%d -s

WORKING_DIRECTORY "${CMAKE_SOURCE_DIR}"

RESULT_VARIABLE git_result

OUTPUT_VARIABLE git_time

ERROR_VARIABLE git_error

OUTPUT_STRIP_TRAILING_WHITESPACE

ERROR_STRIP_TRAILING_WHITESPACE

)

if (NOT git_result EQUAL 0)

return()

endif (NOT git_result EQUAL 0)

set(VERSION_COMMIT_DATE "${git_time}")

endif ()

if (NOT VERSION_IS_RELEASE)

set(VERSION_SUFFIX "~git${VERSION_TAG_OFFSET}.${VERSION_COMMIT_DATE}.${VERSION_COMMIT_HASH}")

else (NOT VERSION_IS_RELEASE)

set(VERSION_SUFFIX "")

endif (NOT VERSION_IS_RELEASE)

set(VERSION_IS_RELEASE ${VERSION_IS_RELEASE} PARENT_SCOPE)

set(VERSION_FULL "${VERSION_LAST_RELEASE}${VERSION_SUFFIX}" PARENT_SCOPE)

set(VERSION_FOUND 1 PARENT_SCOPE)

endfunction(_compute_version_from_git)
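
# Worked example of the Git path, assuming a hypothetical checkout five commits
# past the tag v0.1.0 (the tag, hash, and date below are illustrative values):
#
#   git describe --tags --abbrev=0                 -> v0.1.0
#       VERSION_LAST_RELEASE = 0.1.0
#   git describe --tags                            -> v0.1.0-5-gabc1234
#       VERSION_TAG_OFFSET = 5, VERSION_COMMIT_HASH = abc1234
#   git show --format=%cd --date=format:%Y%m%d -s  -> 20200101
#       VERSION_COMMIT_DATE = 20200101
#
#   VERSION_FULL = 0.1.0~git5.20200101.abc1234, VERSION_IS_RELEASE = 0
#
# When HEAD sits exactly on the tag, both describe calls return the same string,
# so VERSION_FULL is just 0.1.0 and VERSION_IS_RELEASE = 1.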

_compute_version_from_file()

if (NOT VERSION_FOUND)

_compute_version_from_git()

endif (NOT VERSION_FOUND)

# File: dino-0.1.0/cmake/FindATK.cmake

include(PkgConfigWithFallback)

find_pkg_config_with_fallback(ATK

PKG_CONFIG_NAME atk

LIB_NAMES atk-1.0

INCLUDE_NAMES atk/atk.h

INCLUDE_DIR_SUFFIXES atk-1.0 atk-1.0/include

DEPENDS GObject

)

if(ATK_FOUND AND NOT ATK_VERSION)

find_file(ATK_VERSION_HEADER "atk/atkversion.h" HINTS ${ATK_INCLUDE_DIRS})

mark_as_advanced(ATK_VERSION_HEADER)

if(ATK_VERSION_HEADER)

file(STRINGS "${ATK_VERSION_HEADER}" ATK_MAJOR_VERSION REGEX "^#define ATK_MAJOR_VERSION +\\(?([0-9]+)\\)?$")

string(REGEX REPLACE "^#define ATK_MAJOR_VERSION +\\(?([0-9]+)\\)?$" "\\1" ATK_MAJOR_VERSION "${ATK_MAJOR_VERSION}")

file(STRINGS "${ATK_VERSION_HEADER}" ATK_MINOR_VERSION REGEX "^#define ATK_MINOR_VERSION +\\(?([0-9]+)\\)?$")

string(REGEX REPLACE "^#define ATK_MINOR_VERSION +\\(?([0-9]+)\\)?$" "\\1" ATK_MINOR_VERSION "${ATK_MINOR_VERSION}")

file(STRINGS "${ATK_VERSION_HEADER}" ATK_MICRO_VERSION REGEX "^#define ATK_MICRO_VERSION +\\(?([0-9]+)\\)?$")

string(REGEX REPLACE "^#define ATK_MICRO_VERSION +\\(?([0-9]+)\\)?$" "\\1" ATK_MICRO_VERSION "${ATK_MICRO_VERSION}")

set(ATK_VERSION "${ATK_MAJOR_VERSION}.${ATK_MINOR_VERSION}.${ATK_MICRO_VERSION}")

unset(ATK_MAJOR_VERSION)

unset(ATK_MINOR_VERSION)

unset(ATK_MICRO_VERSION)

endif()

endif()
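
# Sketch of the header lines the version regexes above are written to accept.
# The numbers are illustrative, not read from any real atkversion.h:
#
#   #define ATK_MAJOR_VERSION (2)
#   #define ATK_MINOR_VERSION 30
#
# The parentheses around the number are optional, and the capture group keeps
# only the digits, so these two lines would contribute "2" and "30" to ATK_VERSION.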

include(FindPackageHandleStandardArgs)

find_package_handle_standard_args(ATK

REQUIRED_VARS ATK_LIBRARY

VERSION_VAR ATK_VERSION)

# File: dino-0.1.0/cmake/FindCairo.cmake

include(PkgConfigWithFallback)

find_pkg_config_with_fallback(Cairo

PKG_CONFIG_NAME cairo

LIB_NAMES cairo

INCLUDE_NAMES cairo.h

INCLUDE_DIR_SUFFIXES cairo cairo/include

)

if(Cairo_FOUND AND NOT Cairo_VERSION)

find_file(Cairo_VERSION_HEADER "cairo-version.h" HINTS ${Cairo_INCLUDE_DIRS})

mark_as_advanced(Cairo_VERSION_HEADER)

if(Cairo_VERSION_HEADER)

file(STRINGS "${Cairo_VERSION_HEADER}" Cairo_MAJOR_VERSION REGEX "^#define CAIRO_VERSION_MAJOR +\\(?([0-9]+)\\)?$")

string(REGEX REPLACE "^#define CAIRO_VERSION_MAJOR +\\(?([0-9]+)\\)?$" "\\1" Cairo_MAJOR_VERSION "${Cairo_MAJOR_VERSION}")

file(STRINGS "${Cairo_VERSION_HEADER}" Cairo_MINOR_VERSION REGEX "^#define CAIRO_VERSION_MINOR +\\(?([0-9]+)\\)?$")

string(REGEX REPLACE "^#define CAIRO_VERSION_MINOR +\\(?([0-9]+)\\)?$" "\\1" Cairo_MINOR_VERSION "${Cairo_MINOR_VERSION}")

file(STRINGS "${Cairo_VERSION_HEADER}" Cairo_MICRO_VERSION REGEX "^#define CAIRO_VERSION_MICRO +\\(?([0-9]+)\\)?$")

string(REGEX REPLACE "^#define CAIRO_VERSION_MICRO +\\(?([0-9]+)\\)?$" "\\1" Cairo_MICRO_VERSION "${Cairo_MICRO_VERSION}")

set(Cairo_VERSION "${Cairo_MAJOR_VERSION}.${Cairo_MINOR_VERSION}.${Cairo_MICRO_VERSION}")

unset(Cairo_MAJOR_VERSION)

unset(Cairo_MINOR_VERSION)

unset(Cairo_MICRO_VERSION)

endif()

endif()

include(FindPackageHandleStandardArgs)

find_package_handle_standard_args(Cairo

REQUIRED_VARS Cairo_LIBRARY

VERSION_VAR Cairo_VERSION)

# File: dino-0.1.0/cmake/FindCanberra.cmake

include(PkgConfigWithFallback)

find_pkg_config_with_fallback(Canberra

PKG_CONFIG_NAME libcanberra

LIB_NAMES canberra

INCLUDE_NAMES canberra.h

)

include(FindPackageHandleStandardArgs)

find_package_handle_standard_args(Canberra

REQUIRED_VARS Canberra_LIBRARY)
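
# Usage sketch. This is an assumption about the calling CMakeLists.txt, which is
# not shown here; it presumes this directory is already on CMAKE_MODULE_PATH:
#
#   find_package(Canberra)
#   if (Canberra_FOUND)
#       message(STATUS "libcanberra found: ${Canberra_LIBRARY}")
#   endif ()
#
# find_package_handle_standard_args sets Canberra_FOUND once Canberra_LIBRARY
# is non-empty.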

# File: dino-0.1.0/cmake/FindGCrypt.cmake

include(PkgConfigWithFallbackOnConfigScript)

find_pkg_config_with_fallback_on_config_script(GCrypt

PKG_CONFIG_NAME libgcrypt

CONFIG_SCRIPT_NAME libgcrypt

)

include(FindPackageHandleStandardArgs)

find_package_handle_standard_args(GCrypt

REQUIRED_VARS GCrypt_LIBRARY

VERSION_VAR GCrypt_VERSION)

# File: dino-0.1.0/cmake/FindGDK3.cmake

include(PkgConfigWithFallback)

find_pkg_config_with_fallback(GDK3

PKG_CONFIG_NAME gdk-3.0

LIB_NAMES gdk-3

INCLUDE_NAMES gdk/gdk.h

INCLUDE_DIR_SUFFIXES gtk-3.0 gtk-3.0/include gtk+-3.0 gtk+-3.0/include

DEPENDS Pango Cairo GDKPixbuf2

)

if(GDK3_FOUND AND NOT GDK3_VERSION)

find_file(GDK3_VERSION_HEADER "gdk/gdkversionmacros.h" HINTS ${GDK3_INCLUDE_DIRS})

mark_as_advanced(GDK3_VERSION_HEADER)

if(GDK3_VERSION_HEADER)

file(STRINGS "${GDK3_VERSION_HEADER}" GDK3_MAJOR_VERSION REGEX "^#define GDK_MAJOR_VERSION +\\(?([0-9]+)\\)?$")

string(REGEX REPLACE "^#define GDK_MAJOR_VERSION +\\(?([0-9]+)\\)?$" "\\1" GDK3_MAJOR_VERSION "${GDK3_MAJOR_VERSION}")

file(STRINGS "${GDK3_VERSION_HEADER}" GDK3_MINOR_VERSION REGEX "^#define GDK_MINOR_VERSION +\\(?([0-9]+)\\)?$")

string(REGEX REPLACE "^#define GDK_MINOR_VERSION +\\(?([0-9]+)\\)?$" "\\1" GDK3_MINOR_VERSION "${GDK3_MINOR_VERSION}")