pax_global_header: comment=0b29a44a3114b6d0868fc5c116275452d910d72a

icdiff-release-1.9.1/.gitignore

/venv/

icdiff-release-1.9.1/.travis.yml

language: python
python:
  - "2.7"
  - "3.5"
  - "nightly"
script:
  - pip install -r requirements-dev.txt
  - ./test.sh python

icdiff-release-1.9.1/ChangeLog

1.9.1

Handle files with CR characters better and add --strip-trailing-cr

1.9.0

Fix setup.py by symlinking icdiff to icdiff.py

1.8.2

Add short flags for --highlight (-H), --line-numbers (-N), and --whole-file (-W).

Fix use with bash process substitution and other special files

1.8.1

Updated remaining copy of unicode test file (b/1)

1.8.0

Updated unicode test file (input-3)

Allow testing installed version

Allow importing as a module

Minor deduplication tweak to git-icdiff

Add pip instructions to readme

Allow using --tabsize

Allow non-recursive directory diffing

1.7.6

Fixed copyright.

1.7.3

Fix git-icdiff to handle filenames with spaces as arguments.

1.7.2

Don't stop diffing recursively when encountering a binary file.

1.7.1

Don't treat files with identical (mode, size, mtime) as equal.

1.7.0

Add tests

1.6.4

Unbreak --recursive again

1.6.3

Stop setting LESS_IS_MORE with git-icdiff, fixing #33.

1.6.2

Add support for setting the output encoding and default to utf8

1.6.1

Unbreak --recursive

1.6.0

Add support for setting the encoding, and handle fullwidth chars

in python2

1.5.3

Support use as an svn difftool.

Support -U and -L, and allow but ignore -u.

1.5.2

Various pager improvements in git-icdiff.

1.5.1

Make --highlight and --no-bold play nice.

1.5.0

Pass arguments through to icdiff when using git-icdiff.

1.4.0

Use less with "git icdiff" by default.

1.3.2

Fix linewrapping with unicode.

1.3.1

1.3.0 was completely borked.

1.3.0

Use setup.py to support standard python installation.

1.2.2

Start printing output as soon as it's ready instead of waiting for

the whole file to complete.

1.2.1

Space fullwidth characters properly when treating input as unicode.

1.2.0

Add --recursive to support diffing directory trees.

1.1.2

Flush stdout when done.

1.1.1

Don't print stack traces on Ctrl+C or when piping into something

that quits.

1.1.0

Add --no-bold option useful with the solarized colorscheme and for

people who just don't like bold.

1.0.0

First Release

icdiff-release-1.9.1/MANIFEST.in

include README.md

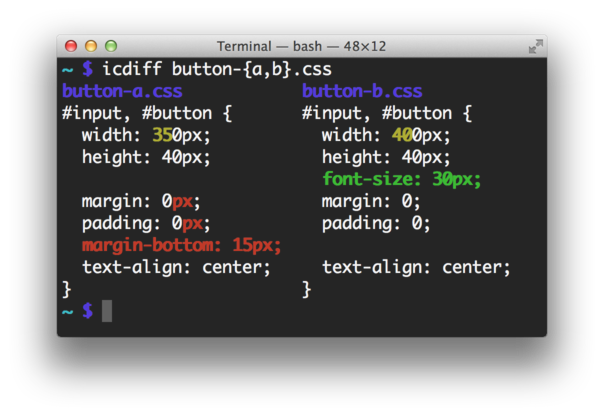

icdiff-release-1.9.1/README.md

# Icdiff

Improved colored diff

## Installation

Download the [latest](https://github.com/jeffkaufman/icdiff/releases) `icdiff` binary and put it on your PATH.

Alternatively, install with pip:

```

pip install git+https://github.com/jeffkaufman/icdiff.git

```

## Usage

```sh

icdiff [options] left_file right_file

```

Show differences between files in a two column view.
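As a rough sketch of how the two columns are sized: `ConsoleDiff` gives each side half of the terminal width and reserves extra room when line numbers are enabled. The helper name `wrap_column` is hypothetical and exists only for this illustration; the arithmetic mirrors what `ConsoleDiff.__init__` does when no explicit wrap column is passed.

```python
def wrap_column(cols=80, line_numbers=False):
    """Return the column at which each side of the diff is wrapped.

    Hypothetical helper for illustration; it mirrors the arithmetic in
    ConsoleDiff.__init__ when wrapcolumn is None.
    """
    if line_numbers:
        # Line numbers take extra room on each side.
        return cols // 2 - 10
    return cols // 2 - 2


if __name__ == "__main__":
    for width in (80, 120):
        print(width, wrap_column(width), wrap_column(width, line_numbers=True))
```

Passing `--cols` changes the total width that is split this way.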

### Options

```
  --version             show program's version number and exit
  -h, --help            show this help message and exit
  --cols=COLS           specify the width of the screen. Autodetection is Unix
                        only
  --encoding=ENCODING   specify the file encoding; defaults to utf8
  --head=HEAD           consider only the first N lines of each file
  -H, --highlight       color by changing the background color instead of the
                        foreground color. Very fast, ugly, displays all
                        changes
  -L LABELS, --label=LABELS
                        override file labels with arbitrary tags. Use twice,
                        one for each file
  -N, --line-numbers    generate output with line numbers
  --no-bold             use non-bold colors; recommended for solarized
  --no-headers          don't label the left and right sides with their file
                        names
  --output-encoding=OUTPUT_ENCODING
                        specify the output encoding; defaults to utf8
  -r, --recursive       recursively compare subdirectories
  --show-all-spaces     color all non-matching whitespace including that which
                        is not needed for drawing the eye to changes. Slow,
                        ugly, displays all changes
  --tabsize=TABSIZE     tab stop spacing
  -u, --patch           generate patch. This is always true, and only exists
                        for compatibility
  -U NUM, --unified=NUM, --numlines=NUM
                        how many lines of context to print; can't be combined
                        with --whole-file
  -W, --whole-file      show the whole file instead of just changed lines and
                        context
  --strip-trailing-cr   strip any trailing carriage return at the end of an
                        input line
```
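The carriage-return handling behind `--strip-trailing-cr` can be sketched as follows. This is an illustration based on `ConsoleDiff.make_table`, not a copy of it: trailing `\r` characters are stripped when the flag is given, and also when every line on both sides ends in `\r` (both files use CRLF endings). The names `normalize_cr` and `strip_option` are hypothetical, and lines are assumed to have already lost their trailing `\n`.

```python
def strip_trailing_cr(lines):
    """Remove trailing carriage returns from every line."""
    return [line.rstrip('\r') for line in lines]


def all_cr(lines):
    """True when every line ends with a carriage return."""
    return all(line.endswith('\r') for line in lines)


def normalize_cr(left, right, strip_option=False):
    # Hypothetical wrapper: strip when asked to, or when both inputs
    # consistently use CRLF endings so the CRs carry no information.
    if strip_option or (all_cr(left) and all_cr(right)):
        return strip_trailing_cr(left), strip_trailing_cr(right)
    return left, right


if __name__ == "__main__":
    print(normalize_cr(["a\r", "b\r"], ["a\r", "c\r"]))
    print(normalize_cr(["a\r", "b"], ["a", "b"]))
```

When only some lines end in `\r`, the carriage returns are kept so the difference stays visible in the output.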

## Using with git

To see what it looks like, try:

```sh

git difftool --extcmd icdiff

```

To install this as a tool you can use with git, copy `git-icdiff` onto your path and run:

```sh

git icdiff

```
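For reference, the pager choice made by the `git-icdiff` wrapper can be sketched in Python. The real wrapper is a small shell script; `choose_pager` and its parameters are hypothetical names used only here. It prefers git's `core.pager`, falls back to `$PAGER`, then to `less`, and appends `-R` for plain `more` or `less`.

```python
def choose_pager(git_core_pager, env_pager):
    """Return the pager command that the diff output would be piped into.

    git_core_pager: value of `git config --get core.pager` ("" if unset)
    env_pager:      value of the PAGER environment variable ("" if unset)
    """
    pager = git_core_pager or env_pager or "less"
    # The wrapper adds -R only when the pager is exactly "more" or "less".
    if pager in ("more", "less"):
        pager += " -R"
    return pager


if __name__ == "__main__":
    print(choose_pager("", ""))
    print(choose_pager("", "more"))
```

A pager configured with its own flags (for example `less -FX`) is used unchanged.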

## Using with subversion

To try it out, run:

```sh

svn diff --diff-cmd icdiff

```

## Using with Mercurial

Add the following to your `~/.hgrc`:

```ini

[extensions]

extdiff=

[extdiff]

cmd.icdiff=icdiff

opts.icdiff=--recursive --line-numbers

```

Or check more [in-depth setup instructions](http://ianobermiller.com/blog/2016/07/14/side-by-side-diffs-for-mercurial-hg-icdiff-revisited/).

## Setting up a dev environment

Create a virtualenv and install the dev dependencies.

This is not needed for normal usage.

```sh

virtualenv venv

source venv/bin/activate

pip install -r requirements-dev.txt

```

## Running tests

```sh

./test.sh python2

./test.sh python3

```

## License

This file is derived from `difflib.HtmlDiff`, which is distributed under the [Python license](http://www.python.org/download/releases/2.6.2/license/).

I release my changes here under the same license. This is GPL compatible.

icdiff-release-1.9.1/git-icdiff

#!/bin/sh
GITPAGER=$(git config --get core.pager)
if [ -z "$GITPAGER" ]; then
  GITPAGER="$PAGER"
fi
if [ -z "$GITPAGER" ]; then
  GITPAGER="less"
fi
if [ "$GITPAGER" = "more" -o "$GITPAGER" = "less" ]; then
  GITPAGER="$GITPAGER -R"
fi

git difftool --no-prompt --extcmd icdiff "$@" | $GITPAGER

icdiff-release-1.9.1/icdiff

#!/usr/bin/env python

""" icdiff.py

Author: Jeff Kaufman, derived from difflib.HtmlDiff

License: This code is usable under the same open terms as the rest of
         python.  See: http://www.python.org/psf/license/

    Copyright (c) 2001, 2002, 2003, 2004, 2005, 2006 Python Software Foundation;
    All Rights Reserved

Based on Python's difflib.HtmlDiff,
with changes to provide console output instead of html output. """

import os
import sys
import errno
import difflib
from optparse import Option, OptionParser
import re
import filecmp
import unicodedata
import codecs

__version__ = "1.9.1"

color_codes = {
    "red":     '\033[0;31m',
    "green":   '\033[0;32m',
    "yellow":  '\033[0;33m',
    "blue":    '\033[0;34m',
    "magenta": '\033[0;35m',
    "cyan":    '\033[0;36m',
    "none":    '\033[m',
    "red_bold":     '\033[1;31m',
    "green_bold":   '\033[1;32m',
    "yellow_bold":  '\033[1;33m',
    "blue_bold":    '\033[1;34m',
    "magenta_bold": '\033[1;35m',
    "cyan_bold":    '\033[1;36m',
}

class ConsoleDiff(object):
    """Console colored side by side comparison with change highlights.

    Based on difflib.HtmlDiff

    This class can be used to create a text-mode table showing a side
    by side, line by line comparison of text with inter-line and
    intra-line change highlights in ansi color escape sequences as
    read by xterm.  The table can be generated in either full or
    contextual difference mode.

    To generate the table, call make_table.

    Usage is almost the same as HtmlDiff except only make_table is
    implemented and the file can be invoked on the command line.
    Run::

      python icdiff.py --help

    for command line usage information.

    """

    def __init__(self, tabsize=8, wrapcolumn=None, linejunk=None,
                 charjunk=difflib.IS_CHARACTER_JUNK, cols=80,
                 line_numbers=False,
                 show_all_spaces=False,
                 highlight=False,
                 no_bold=False,
                 strip_trailing_cr=False):
        """ConsoleDiff instance initializer

        Arguments:
        tabsize -- tab stop spacing, defaults to 8.
        wrapcolumn -- column number where lines are broken and wrapped,
            defaults to None where lines are not wrapped.
        linejunk, charjunk -- keyword arguments passed into ndiff() (used by
            ConsoleDiff() to generate the side by side differences).  See
            ndiff() documentation for argument default values and descriptions.
        """

        self._tabsize = tabsize
        self.line_numbers = line_numbers
        self.cols = cols
        self.show_all_spaces = show_all_spaces
        self.highlight = highlight
        self.no_bold = no_bold
        self.strip_trailing_cr = strip_trailing_cr

        if wrapcolumn is None:
            if not line_numbers:
                wrapcolumn = self.cols // 2 - 2
            else:
                wrapcolumn = self.cols // 2 - 10

        self._wrapcolumn = wrapcolumn
        self._linejunk = linejunk
        self._charjunk = charjunk

    def _tab_newline_replace(self, fromlines, tolines):
        """Returns from/to line lists with tabs expanded and newlines removed.

        Instead of tab characters being replaced by the number of spaces
        needed to fill in to the next tab stop, this function will fill
        the space with tab characters.  This is done so that the difference
        algorithms can identify changes in a file when tabs are replaced by
        spaces and vice versa.  At the end of the table generation, the tab
        characters will be replaced with a space.
        """
        def expand_tabs(line):
            # hide real spaces
            line = line.replace(' ', '\0')
            # expand tabs into spaces
            line = line.expandtabs(self._tabsize)
            # replace spaces from expanded tabs back into tab characters
            # (we'll replace them with markup after we do differencing)
            line = line.replace(' ', '\t')
            return line.replace('\0', ' ').rstrip('\n')
        fromlines = [expand_tabs(line) for line in fromlines]
        tolines = [expand_tabs(line) for line in tolines]
        return fromlines, tolines

    def _strip_trailing_cr(self, lines):
        """Remove trailing carriage returns (Windows line endings)."""
        lines = [line.rstrip('\r') for line in lines]
        return lines

    def _all_cr_nl(self, lines):
        """Whether every line ends with a carriage return (\\r\\n endings)."""
        return all(line.endswith('\r') for line in lines)

    def _display_len(self, s):
        # Handle wide characters like Chinese.
        def width(c):
            if ((isinstance(c, type(u"")) and
                 unicodedata.east_asian_width(c) == 'W')):
                return 2
            elif c == '\r':
                return 2
            return 1

        return sum(width(c) for c in s)

    def _split_line(self, data_list, line_num, text):
        """Builds list of text lines by splitting text lines at wrap point

        This function will determine if the input text line needs to be
        wrapped (split) into separate lines.  If so, the first wrap point
        will be determined and the first line appended to the output
        text line list.  This function is used recursively to handle
        the second part of the split line to further split it.
        """
        # if blank line or context separator, just add it to the output list
        if not line_num:
            data_list.append((line_num, text))
            return

        # if line text doesn't need wrapping, just add it to the output list
        if ((self._display_len(text) - (text.count('\0') * 3) <=
             self._wrapcolumn)):
            data_list.append((line_num, text))
            return

        # scan text looking for the wrap point, keeping track if the wrap
        # point is inside markers
        i = 0
        n = 0
        mark = ''
        while n < self._wrapcolumn and i < len(text):
            if text[i] == '\0':
                i += 1
                mark = text[i]
                i += 1
            elif text[i] == '\1':
                i += 1
                mark = ''
            else:
                n += self._display_len(text[i])
                i += 1

        # wrap point is inside text, break it up into separate lines
        line1 = text[:i]
        line2 = text[i:]

        # if wrap point is inside markers, place end marker at end of first
        # line and start marker at beginning of second line because each
        # line will have its own table tag markup around it.
        if mark:
            line1 = line1 + '\1'
            line2 = '\0' + mark + line2

        # tack on first line onto the output list
        data_list.append((line_num, line1))

        # use this routine again to wrap the remaining text
        self._split_line(data_list, '>', line2)

    def _line_wrapper(self, diffs):
        """Returns iterator that splits (wraps) mdiff text lines"""

        # pull from/to data and flags from mdiff iterator
        for fromdata, todata, flag in diffs:
            # check for context separators and pass them through
            if flag is None:
                yield fromdata, todata, flag
                continue
            (fromline, fromtext), (toline, totext) = fromdata, todata
            # for each from/to line split it at the wrap column to form
            # list of text lines.
            fromlist, tolist = [], []
            self._split_line(fromlist, fromline, fromtext)
            self._split_line(tolist, toline, totext)
            # yield from/to line in pairs inserting blank lines as
            # necessary when one side has more wrapped lines
            while fromlist or tolist:
                if fromlist:
                    fromdata = fromlist.pop(0)
                else:
                    fromdata = ('', ' ')
                if tolist:
                    todata = tolist.pop(0)
                else:
                    todata = ('', ' ')
                yield fromdata, todata, flag

    def _collect_lines(self, diffs):
        """Collects mdiff output into separate lists

        Before storing the mdiff from/to data into a list, it is converted
        into a single line of text with console markup.
        """

        # pull from/to data and flags from mdiff style iterator
        for fromdata, todata, flag in diffs:
            if (fromdata, todata, flag) == (None, None, None):
                yield None
            else:
                yield (self._format_line(*fromdata),
                       self._format_line(*todata))

    def _format_line(self, linenum, text):
        text = text.rstrip()
        if not self.line_numbers:
            return text
        return self._add_line_numbers(linenum, text)

    def _add_line_numbers(self, linenum, text):
        try:
            lid = '%d' % linenum
        except TypeError:
            # handle blank lines where linenum is '>' or ''
            lid = ''
            return text
        return '%s %s' % (self._rpad(lid, 8), text)

    def _real_len(self, s):
        l = 0
        in_esc = False
        prev = ' '
        for c in replace_all({'\0+': "",
                              '\0-': "",
                              '\0^': "",
                              '\1': "",
                              '\t': ' '}, s):
            if in_esc:
                if c == "m":
                    in_esc = False
            else:
                if c == "[" and prev == "\033":
                    in_esc = True
                    l -= 1  # we counted prev when we shouldn't have
                else:
                    l += self._display_len(c)
            prev = c

        return l

    def _rpad(self, s, field_width):
        return self._pad(s, field_width) + s

    def _pad(self, s, field_width):
        return " " * (field_width - self._real_len(s))

    def _lpad(self, s, field_width):
        return s + self._pad(s, field_width)

    def make_table(self, fromlines, tolines, fromdesc='', todesc='',
                   context=False, numlines=5):
        """Generates table of side by side comparison with change highlights

        Arguments:
        fromlines -- list of "from" lines
        tolines -- list of "to" lines
        fromdesc -- "from" file column header string
        todesc -- "to" file column header string
        context -- set to True for contextual differences (defaults to False
            which shows full differences).
        numlines -- number of context lines.  When context is set True,
            controls number of lines displayed before and after the change.
            When context is False, controls the number of lines to place
            the "next" link anchors before the next change (so click of
            "next" link jumps to just before the change).
        """
        if context:
            context_lines = numlines
        else:
            context_lines = None

        # change tabs to spaces before it gets more difficult after we insert
        # markup
        fromlines, tolines = self._tab_newline_replace(fromlines, tolines)

        if self.strip_trailing_cr or (
                self._all_cr_nl(fromlines) and self._all_cr_nl(tolines)):
            fromlines = self._strip_trailing_cr(fromlines)
            tolines = self._strip_trailing_cr(tolines)

        # create diffs iterator which generates side by side from/to data
        diffs = difflib._mdiff(fromlines, tolines, context_lines,
                               linejunk=self._linejunk,
                               charjunk=self._charjunk)

        # set up iterator to wrap lines that exceed desired width
        if self._wrapcolumn:
            diffs = self._line_wrapper(diffs)
        diffs = self._collect_lines(diffs)

        for left, right in self._generate_table(fromdesc, todesc, diffs):
            yield self.colorize(
                "%s %s" % (self._lpad(left, self.cols // 2 - 1),
                           self._lpad(right, self.cols // 2 - 1)))

    def _generate_table(self, fromdesc, todesc, diffs):
        if fromdesc or todesc:
            yield (simple_colorize(fromdesc, "blue"),
                   simple_colorize(todesc, "blue"))

        for i, line in enumerate(diffs):
            if line is None:
                # mdiff yields None on separator lines; skip the bogus ones
                # generated for the first line
                if i > 0:
                    yield (simple_colorize('---', "blue"),
                           simple_colorize('---', "blue"))
            else:
                yield line

    def colorize(self, s):
        def background(color):
            return replace_all({"\033[1;": "\033[7;",
                                "\033[0;": "\033[7;"}, color)

        if self.no_bold:
            C_ADD = color_codes["green"]
            C_SUB = color_codes["red"]
            C_CHG = color_codes["yellow"]
        else:
            C_ADD = color_codes["green_bold"]
            C_SUB = color_codes["red_bold"]
            C_CHG = color_codes["yellow_bold"]

        if self.highlight:
            C_ADD, C_SUB, C_CHG = (background(C_ADD),
                                   background(C_SUB),
                                   background(C_CHG))

        C_NONE = color_codes["none"]
        colors = (C_ADD, C_SUB, C_CHG, C_NONE)

        s = replace_all({'\0+': C_ADD,
                         '\0-': C_SUB,
                         '\0^': C_CHG,
                         '\1': C_NONE,
                         '\t': ' ',
                         '\r': '\\r'}, s)

        if self.highlight:
            return s

        if not self.show_all_spaces:
            # If there's a change consisting entirely of whitespace,
            # don't color it.
            return re.sub("\033\\[[01];3([123])m(\\s+)(\033\\[)",
                          "\033[7;3\\1m\\2\\3", s)

        def will_see_coloredspace(i, s):
            while i < len(s) and s[i].isspace():
                i += 1
            if i < len(s) and s[i] == '\033':
                return False
            return True

        n_s = []
        in_color = False
        seen_coloredspace = False
        for i, c in enumerate(s):
            if len(n_s) > 6 and n_s[-1] == "m":
                ns_end = "".join(n_s[-7:])
                for color in colors:
                    if ns_end.endswith(color):
                        if color != in_color:
                            seen_coloredspace = False
                        in_color = color
                if ns_end.endswith(C_NONE):
                    in_color = False

            if ((c.isspace() and in_color and
                 (self.show_all_spaces or not (seen_coloredspace or
                                               will_see_coloredspace(i, s))))):
                n_s.extend([C_NONE, background(in_color), c, C_NONE, in_color])
            else:
                if in_color:
                    seen_coloredspace = True
                n_s.append(c)

        joined = "".join(n_s)

        return joined

def simple_colorize(s, chosen_color):
    return "%s%s%s" % (color_codes[chosen_color], s, color_codes["none"])


def replace_all(replacements, string):
    for search, replace in replacements.items():
        string = string.replace(search, replace)
    return string


class MultipleOption(Option):
    ACTIONS = Option.ACTIONS + ("extend",)
    STORE_ACTIONS = Option.STORE_ACTIONS + ("extend",)
    TYPED_ACTIONS = Option.TYPED_ACTIONS + ("extend",)
    ALWAYS_TYPED_ACTIONS = Option.ALWAYS_TYPED_ACTIONS + ("extend",)

    def take_action(self, action, dest, opt, value, values, parser):
        if action == "extend":
            values.ensure_value(dest, []).append(value)
        else:
            Option.take_action(self, action, dest, opt, value, values, parser)

def create_option_parser():
    # If you change any of these, also update README.
    parser = OptionParser(usage="usage: %prog [options] left_file right_file",
                          version="icdiff version %s" % __version__,
                          description="Show differences between files in a "
                          "two column view.",
                          option_class=MultipleOption)
    parser.add_option("--cols", default=None,
                      help="specify the width of the screen. Autodetection is "
                      "Unix only")
    parser.add_option("--encoding", default="utf-8",
                      help="specify the file encoding; defaults to utf8")
    parser.add_option("--head", default=0,
                      help="consider only the first N lines of each file")
    parser.add_option("-H", "--highlight", default=False,
                      action="store_true",
                      help="color by changing the background color instead of "
                      "the foreground color.  Very fast, ugly, displays all "
                      "changes")
    parser.add_option("-L", "--label",
                      action="extend",
                      type="string",
                      dest='labels',
                      help="override file labels with arbitrary tags. "
                      "Use twice, one for each file")
    parser.add_option("-N", "--line-numbers", default=False,
                      action="store_true",
                      help="generate output with line numbers")
    parser.add_option("--no-bold", default=False,
                      action="store_true",
                      help="use non-bold colors; recommended for solarized")
    parser.add_option("--no-headers", default=False,
                      action="store_true",
                      help="don't label the left and right sides "
                      "with their file names")
    parser.add_option("--output-encoding", default="utf-8",
                      help="specify the output encoding; defaults to utf8")
    parser.add_option("-r", "--recursive", default=False,
                      action="store_true",
                      help="recursively compare subdirectories")
    parser.add_option("--show-all-spaces", default=False,
                      action="store_true",
                      help="color all non-matching whitespace including "
                      "that which is not needed for drawing the eye to "
                      "changes.  Slow, ugly, displays all changes")
    parser.add_option("--tabsize", default=8,
                      help="tab stop spacing")
    parser.add_option("-u", "--patch", default=True,
                      action="store_true",
                      help="generate patch. This is always true, "
                      "and only exists for compatibility")
    parser.add_option("-U", "--unified", "--numlines", default=5,
                      metavar="NUM",
                      help="how many lines of context to print; "
                      "can't be combined with --whole-file")
    parser.add_option("-W", "--whole-file", default=False,
                      action="store_true",
                      help="show the whole file instead of just changed "
                      "lines and context")
    parser.add_option("--strip-trailing-cr", default=False,
                      action="store_true",
                      help="strip any trailing carriage return at the end of "
                      "an input line")
    return parser

def set_cols_option(options):
    if not options.cols:
        def ioctl_GWINSZ(fd):
            try:
                import fcntl
                import termios
                import struct
                cr = struct.unpack('hh', fcntl.ioctl(fd, termios.TIOCGWINSZ,
                                                     '1234'))
            except Exception:
                return None
            return cr
        cr = ioctl_GWINSZ(0) or ioctl_GWINSZ(1) or ioctl_GWINSZ(2)
        if cr:
            options.cols = cr[1]
        else:
            options.cols = 80
    return options


def validate_has_two_arguments(parser, args):
    if len(args) != 2:
        parser.print_help()
        sys.exit()


def start():
    parser = create_option_parser()
    options, args = parser.parse_args()
    validate_has_two_arguments(parser, args)
    options = set_cols_option(options)
    diff(options, *args)


def codec_print(s, options):
    s = "%s\n" % s
    if hasattr(sys.stdout, "buffer"):
        sys.stdout.buffer.write(s.encode(options.output_encoding))
    else:
        sys.stdout.write(s.encode(options.output_encoding))

def diff(options, a, b):
    def print_meta(s):
        codec_print(simple_colorize(s, "magenta"), options)

    # Don't use os.path.isfile; it returns False for file-like entities like
    # bash's process substitution (/dev/fd/N).
    is_a_file = not os.path.isdir(a)
    is_b_file = not os.path.isdir(b)

    if is_a_file and is_b_file:
        try:
            if not filecmp.cmp(a, b, shallow=False):
                diff_files(options, a, b)
        except OSError as e:
            if e.errno == errno.ENOENT:
                print_meta("error: file '%s' was not found" % e.filename)
            else:
                # re-raise unexpected errors with the original traceback
                raise

    elif not is_a_file and not is_b_file:
        a_contents = set(os.listdir(a))
        b_contents = set(os.listdir(b))

        for child in sorted(a_contents.union(b_contents)):
            if child not in b_contents:
                print_meta("Only in %s: %s" % (a, child))
            elif child not in a_contents:
                print_meta("Only in %s: %s" % (b, child))
            elif options.recursive:
                diff(options,
                     os.path.join(a, child),
                     os.path.join(b, child))

    elif not is_a_file and is_b_file:
        print_meta("File %s is a directory while %s is a file" % (a, b))

    elif is_a_file and not is_b_file:
        print_meta("File %s is a file while %s is a directory" % (a, b))


def read_file(fname, options):
    try:
        with codecs.open(fname, encoding=options.encoding, mode="rb") as inf:
            return inf.readlines()
    except UnicodeDecodeError as e:
        codec_print(
            "error: file '%s' not valid with encoding '%s': <%s> at %s-%s." %
            (fname, options.encoding, e.reason, e.start, e.end), options)
        raise

def diff_files(options, a, b):
    if options.labels:
        if len(options.labels) == 2:
            headers = options.labels
        else:
            codec_print("error: to use arbitrary file labels, "
                        "specify -L twice.", options)
            return
    else:
        headers = a, b
    if options.no_headers:
        headers = None, None

    head = int(options.head)

    assert not os.path.isdir(a)
    assert not os.path.isdir(b)

    try:
        lines_a = read_file(a, options)
        lines_b = read_file(b, options)
    except UnicodeDecodeError:
        return

    if head != 0:
        lines_a = lines_a[:head]
        lines_b = lines_b[:head]

    cd = ConsoleDiff(cols=int(options.cols),
                     show_all_spaces=options.show_all_spaces,
                     highlight=options.highlight,
                     no_bold=options.no_bold,
                     line_numbers=options.line_numbers,
                     tabsize=int(options.tabsize),
                     strip_trailing_cr=options.strip_trailing_cr)
    for line in cd.make_table(
            lines_a, lines_b, headers[0], headers[1],
            context=(not options.whole_file),
            numlines=int(options.unified)):
        codec_print(line, options)
        sys.stdout.flush()


if __name__ == "__main__":
    try:
        start()
    except KeyboardInterrupt:
        pass
    except IOError as e:
        if e.errno == errno.EPIPE:
            pass
        else:
            raise

icdiff-release-1.9.1/icdiff.py -> icdiff

icdiff-release-1.9.1/requirements-dev.txt

flake8==2.4.0
mccabe==0.3
pep8==1.5.7
pyflakes==0.8.1

��������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/setup.py�����������������������������������������������������������������������0000664�0000000�0000000�00000001004�13164507017�0016075�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������from distutils.util import convert_path

from setuptools import setup

from icdiff import __version__

setup(

name="icdiff",

version=__version__,

url="http://www.jefftk.com/icdiff",

author="Jeff Kaufman",

author_email="jeff@jefftk.com",

description="improved colored diff",

long_description=open('README.md').read(),

scripts=['git-icdiff'],

py_modules=['icdiff'],

entry_points={

'console_scripts': [

'icdiff=icdiff:start',

],

},

)

����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/test.sh������������������������������������������������������������������������0000775�0000000�0000000�00000011045�13164507017�0015707�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������#!/bin/bash

# Usage: ./test.sh [--regold] [test-name] python-version

# Example:

# Run all tests:

# ./test.sh python3

# Regold all tests:

# ./test.sh --regold python3

# Run one test:

# ./test.sh tests/gold-45-sas-h-nb.txt python3

# Regold one test:

# ./test.sh --regold tests/gold-45-sas-h-nb.txt python3

if [ "$#" -gt 1 -a "$1" = "--regold" ]; then

REGOLD=true

shift

else

REGOLD=false

fi

TEST_NAME=all

if [ "$#" -gt 1 ]; then

TEST_NAME=$1

shift

fi

if [ "$#" != 1 ]; then

echo "Usage: '$0 [--regold] [test-name] python[23]'"

exit 1

fi

PYTHON="$1"

ICDIFF="icdiff"

function fail() {

echo "FAIL"

exit 1

}

function check_gold() {

local gold=tests/$1

shift

if [ "$TEST_NAME" != "all" -a "$TEST_NAME" != "$gold" ]; then

return

fi

echo " check_gold $gold matches $@"

local tmp=/tmp/icdiff.output

if [ ! -z "$INSTALLED" ]; then

"$ICDIFF" "$@" &> $tmp

else

"$PYTHON" "$ICDIFF" "$@" &> $tmp

fi

if $REGOLD; then

if diff $tmp $gold > /dev/null; then

echo "Did not need to regold $gold"

else

cat $tmp

read -p "Is this correct? y/n > " -n 1 -r

echo

if [[ $REPLY =~ ^[Yy]$ ]]; then

mv $tmp $gold

echo "Regolded $gold."

else

echo "Did not regold $gold."

fi

fi

return

fi

if ! diff $gold $tmp; then

echo "Got: ($tmp)"

cat $tmp

echo "Expected: ($gold)"

cat $gold

fail

fi

}

check_gold gold-recursive.txt --recursive tests/{a,b} --cols=80

check_gold gold-dir.txt tests/{a,b} --cols=80

check_gold gold-12.txt tests/input-{1,2}.txt --cols=80

check_gold gold-3.txt tests/input-{3,3}.txt

check_gold gold-45.txt tests/input-{4,5}.txt --cols=80

check_gold gold-45-95.txt tests/input-{4,5}.txt --cols=95

check_gold gold-45-sas.txt tests/input-{4,5}.txt --cols=80 --show-all-spaces

check_gold gold-45-h.txt tests/input-{4,5}.txt --cols=80 --highlight

check_gold gold-45-nb.txt tests/input-{4,5}.txt --cols=80 --no-bold

check_gold gold-45-sas-h.txt tests/input-{4,5}.txt --cols=80 --show-all-spaces --highlight

check_gold gold-45-sas-h-nb.txt tests/input-{4,5}.txt --cols=80 --show-all-spaces --highlight --no-bold

check_gold gold-45-h-nb.txt tests/input-{4,5}.txt --cols=80 --highlight --no-bold

check_gold gold-45-ln.txt tests/input-{4,5}.txt --cols=80 --line-numbers

check_gold gold-45-nh.txt tests/input-{4,5}.txt --cols=80 --no-headers

check_gold gold-45-h3.txt tests/input-{4,5}.txt --cols=80 --head=3

check_gold gold-45-l.txt tests/input-{4,5}.txt --cols=80 -L left

check_gold gold-45-lr.txt tests/input-{4,5}.txt --cols=80 -L left -L right

check_gold gold-45-pipe.txt tests/input-4.txt <(cat tests/input-5.txt) --cols=80 --no-headers

check_gold gold-4dn.txt tests/input-4.txt /dev/null --cols=80 -L left -L right

check_gold gold-dn5.txt /dev/null tests/input-5.txt --cols=80 -L left -L right

check_gold gold-67.txt tests/input-{6,7}.txt --cols=80

check_gold gold-67-wf.txt tests/input-{6,7}.txt --cols=80 --whole-file

check_gold gold-67-ln.txt tests/input-{6,7}.txt --cols=80 --line-numbers

check_gold gold-67-u3.txt tests/input-{6,7}.txt --cols=80 -U 3

check_gold gold-tabs-default.txt tests/input-{8,9}.txt --cols=80

check_gold gold-tabs-4.txt tests/input-{8,9}.txt --cols=80 --tabsize=4

check_gold gold-file-not-found.txt tests/input-4.txt nonexistent_file

check_gold gold-strip-cr-off.txt tests/input-4.txt tests/input-4-cr.txt --cols=80

check_gold gold-strip-cr-on.txt tests/input-4.txt tests/input-4-cr.txt --cols=80 --strip-trailing-cr

check_gold gold-no-cr-indent tests/input-4-cr.txt tests/input-4-partial-cr.txt --cols=80

check_gold gold-hide-cr-if-dos tests/input-4-cr.txt tests/input-5-cr.txt --cols=80

if [ ! -z "$INSTALLED" ]; then

VERSION=$(icdiff --version | awk '{print $NF}')

else

VERSION=$(./icdiff --version | awk '{print $NF}')

fi

if [ "$VERSION" != "$(head -n 1 ChangeLog)" ]; then

echo "Version mismatch between ChangeLog and icdiff source."

fail

fi

if ! command -v 'flake8' >/dev/null 2>&1; then

echo 'Could not find flake8. Ensure flake8 is installed and on your $PATH.'

if [ -z "$VIRTUAL_ENV" ]; then

echo 'It appears you have forgotten to activate your virtualenv.'

fi

echo 'See README.md for details on setting up your environment.'

fail

fi

echo 'Running flake8 linter...'

if ! flake8 icdiff; then

fail

fi

if ! $REGOLD; then

echo PASS

fi

�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/�������������������������������������������������������������������������0000775�0000000�0000000�00000000000�13164507017�0015532�5����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/a/�����������������������������������������������������������������������0000775�0000000�0000000�00000000000�13164507017�0015752�5����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/a/1����������������������������������������������������������������������0000664�0000000�0000000�00000000000�13164507017�0016023�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000�������������������������������������������������������������������������������������������������������������������������������������������������������������
�����������icdiff-release-1.9.1/tests/a/c/���������������������������������������������������������������������0000775�0000000�0000000�00000000000�13164507017�0016174�5����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/a/c/e��������������������������������������������������������������������0000664�0000000�0000000�00000000002�13164507017�0016333�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������1

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/a/c/f��������������������������������������������������������������������0000664�0000000�0000000�00000000002�13164507017�0016334�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������2

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/a/j����������������������������������������������������������������������0000664�0000000�0000000�00000000002�13164507017�0016116�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������7

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/b/�����������������������������������������������������������������������0000775�0000000�0000000�00000000000�13164507017�0015753�5����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/b/1����������������������������������������������������������������������0000664�0000000�0000000�00000054375�13164507017�0016054�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������UTF-8 decoder capability and stress test

----------------------------------------

Markus Kuhn - 2015-08-28 - CC BY 4.0

This test file can help you examine, how your UTF-8 decoder handles

various types of correct, malformed, or otherwise interesting UTF-8

sequences. This file is not meant to be a conformance test. It does

not prescribe any particular outcome. Therefore, there is no way to

"pass" or "fail" this test file, even though the text does suggest a

preferable decoder behaviour at some places. Its aim is, instead, to

help you think about, and test, the behaviour of your UTF-8 decoder on a

systematic collection of unusual inputs. Experience so far suggests

that most first-time authors of UTF-8 decoders find at least one

serious problem in their decoder using this file.

The test lines below cover boundary conditions, malformed UTF-8

sequences, as well as correctly encoded UTF-8 sequences of Unicode code

points that should never occur in a correct UTF-8 file.

According to ISO 10646-1:2000, sections D.7 and 2.3c, a device

receiving UTF-8 shall interpret a "malformed sequence in the same way

that it interprets a character that is outside the adopted subset" and

"characters that are not within the adopted subset shall be indicated

to the user" by a receiving device. One commonly used approach in

UTF-8 decoders is to replace any malformed UTF-8 sequence by a

replacement character (U+FFFD), which looks a bit like an inverted

question mark, or a similar symbol. It might be a good idea to

visually distinguish a malformed UTF-8 sequence from a correctly

encoded Unicode character that is just not available in the current

font but otherwise fully legal, even though ISO 10646-1 doesn't

mandate this. In any case, just ignoring malformed sequences or

unavailable characters does not conform to ISO 10646, will make

debugging more difficult, and can lead to user confusion.

Please check, whether a malformed UTF-8 sequence is (1) represented at

all, (2) represented by exactly one single replacement character (or

equivalent signal), and (3) the following quotation mark after an

illegal UTF-8 sequence is correctly displayed, i.e. proper

resynchronization takes place immediately after any malformed

sequence. This file says "THE END" in the last line, so if you don't

see that, your decoder crashed somehow before, which should always be

cause for concern.

All lines in this file are exactly 79 characters long (plus the line

feed). In addition, all lines end with "|", except for the two test

lines 2.1.1 and 2.2.1, which contain non-printable ASCII controls

U+0000 and U+007F. If you display this file with a fixed-width font,

these "|" characters should all line up in column 79 (right margin).

This allows you to test quickly, whether your UTF-8 decoder finds the

correct number of characters in every line, that is whether each

malformed sequences is replaced by a single replacement character.

Note that, as an alternative to the notion of malformed sequence used

here, it is also a perfectly acceptable (and in some situations even

preferable) solution to represent each individual byte of a malformed

sequence with a replacement character. If you follow this strategy in

your decoder, then please ignore the "|" column.

Here come the tests: |

|

1 Some correct UTF-8 text |

|

You should see the Greek word 'kosme': "κόσμε" |

|

2 Boundary condition test cases |

|

2.1 First possible sequence of a certain length |

|

2.1.1 1 byte (U-00000000): "�"

2.1.2 2 bytes (U-00000080): "" |

2.1.3 3 bytes (U-00000800): "ࠀ" |

2.1.4 4 bytes (U-00010000): "𐀀" |

2.1.5 5 bytes (U-00200000): "�����" |

2.1.6 6 bytes (U-04000000): "������" |

|

2.2 Last possible sequence of a certain length |

|

2.2.1 1 byte (U-0000007F): ""

2.2.2 2 bytes (U-000007FF): "߿" |

2.2.3 3 bytes (U-0000FFFF): "" |

2.2.4 4 bytes (U-001FFFFF): "����" |

2.2.5 5 bytes (U-03FFFFFF): "�����" |

2.2.6 6 bytes (U-7FFFFFFF): "������" |

|

2.3 Other boundary conditions |

|

2.3.1 U-0000D7FF = ed 9f bf = "" |

2.3.2 U-0000E000 = ee 80 80 = "" |

2.3.3 U-0000FFFD = ef bf bd = "�" |

2.3.4 U-0010FFFF = f4 8f bf bf = "" |

2.3.5 U-00110000 = f4 90 80 80 = "����" |

|

3 Malformed sequences |

|

3.1 Unexpected continuation bytes |

|

Each unexpected continuation byte should be separately signalled as a |

malformed sequence of its own. |

|

3.1.1 First continuation byte 0x80: "�" |

3.1.2 Last continuation byte 0xbf: "�" |

|

3.1.3 2 continuation bytes: "��" |

3.1.4 3 continuation bytes: "���" |

3.1.5 4 continuation bytes: "����" |

3.1.6 5 continuation bytes: "�����" |

3.1.7 6 continuation bytes: "������" |

3.1.8 7 continuation bytes: "�������" |

|

3.1.9 Sequence of all 64 possible continuation bytes (0x80-0xbf): |

|

"���������������� |

���������������� |

���������������� |

����������������" |

|

3.2 Lonely start characters |

|

3.2.1 All 32 first bytes of 2-byte sequences (0xc0-0xdf), |

each followed by a space character: |

|

"� � � � � � � � � � � � � � � � |

� � � � � � � � � � � � � � � � " |

|

3.2.2 All 16 first bytes of 3-byte sequences (0xe0-0xef), |

each followed by a space character: |

|

"� � � � � � � � � � � � � � � � " |

|

3.2.3 All 8 first bytes of 4-byte sequences (0xf0-0xf7), |

each followed by a space character: |

|

"� � � � � � � � " |

|

3.2.4 All 4 first bytes of 5-byte sequences (0xf8-0xfb), |

each followed by a space character: |

|

"� � � � " |

|

3.2.5 All 2 first bytes of 6-byte sequences (0xfc-0xfd), |

each followed by a space character: |

|

"� � " |

|

3.3 Sequences with last continuation byte missing |

|

All bytes of an incomplete sequence should be signalled as a single |

malformed sequence, i.e., you should see only a single replacement |

character in each of the next 10 tests. (Characters as in section 2) |

|

3.3.1 2-byte sequence with last byte missing (U+0000): "�" |

3.3.2 3-byte sequence with last byte missing (U+0000): "��" |

3.3.3 4-byte sequence with last byte missing (U+0000): "���" |

3.3.4 5-byte sequence with last byte missing (U+0000): "����" |

3.3.5 6-byte sequence with last byte missing (U+0000): "�����" |

3.3.6 2-byte sequence with last byte missing (U-000007FF): "�" |

3.3.7 3-byte sequence with last byte missing (U-0000FFFF): "�" |

3.3.8 4-byte sequence with last byte missing (U-001FFFFF): "���" |

3.3.9 5-byte sequence with last byte missing (U-03FFFFFF): "����" |

3.3.10 6-byte sequence with last byte missing (U-7FFFFFFF): "�����" |

|

3.4 Concatenation of incomplete sequences |

|

All the 10 sequences of 3.3 concatenated, you should see 10 malformed |

sequences being signalled: |

|

"�����������������������������" |

|

3.5 Impossible bytes |

|

The following two bytes cannot appear in a correct UTF-8 string |

|

3.5.1 fe = "�" |

3.5.2 ff = "�" |

3.5.3 fe fe ff ff = "����" |

|

4 Overlong sequences |

|

The following sequences are not malformed according to the letter of |

the Unicode 2.0 standard. However, they are longer then necessary and |

a correct UTF-8 encoder is not allowed to produce them. A "safe UTF-8 |

decoder" should reject them just like malformed sequences for two |

reasons: (1) It helps to debug applications if overlong sequences are |

not treated as valid representations of characters, because this helps |

to spot problems more quickly. (2) Overlong sequences provide |

alternative representations of characters, that could maliciously be |

used to bypass filters that check only for ASCII characters. For |

instance, a 2-byte encoded line feed (LF) would not be caught by a |

line counter that counts only 0x0a bytes, but it would still be |

processed as a line feed by an unsafe UTF-8 decoder later in the |

pipeline. From a security point of view, ASCII compatibility of UTF-8 |

sequences means also, that ASCII characters are *only* allowed to be |

represented by ASCII bytes in the range 0x00-0x7f. To ensure this |

aspect of ASCII compatibility, use only "safe UTF-8 decoders" that |

reject overlong UTF-8 sequences for which a shorter encoding exists. |

|

4.1 Examples of an overlong ASCII character |

|

With a safe UTF-8 decoder, all of the following five overlong |

representations of the ASCII character slash ("/") should be rejected |

like a malformed UTF-8 sequence, for instance by substituting it with |

a replacement character. If you see a slash below, you do not have a |

safe UTF-8 decoder! |

|

4.1.1 U+002F = c0 af = "��" |

4.1.2 U+002F = e0 80 af = "���" |

4.1.3 U+002F = f0 80 80 af = "����" |

4.1.4 U+002F = f8 80 80 80 af = "�����" |

4.1.5 U+002F = fc 80 80 80 80 af = "������" |

|

4.2 Maximum overlong sequences |

|

Below you see the highest Unicode value that is still resulting in an |

overlong sequence if represented with the given number of bytes. This |

is a boundary test for safe UTF-8 decoders. All five characters should |

be rejected like malformed UTF-8 sequences. |

|

4.2.1 U-0000007F = c1 bf = "��" |

4.2.2 U-000007FF = e0 9f bf = "���" |

4.2.3 U-0000FFFF = f0 8f bf bf = "����" |

4.2.4 U-001FFFFF = f8 87 bf bf bf = "�����" |

4.2.5 U-03FFFFFF = fc 83 bf bf bf bf = "������" |

|

4.3 Overlong representation of the NUL character |

|

The following five sequences should also be rejected like malformed |

UTF-8 sequences and should not be treated like the ASCII NUL |

character. |

|

4.3.1 U+0000 = c0 80 = "��" |

4.3.2 U+0000 = e0 80 80 = "���" |

4.3.3 U+0000 = f0 80 80 80 = "����" |

4.3.4 U+0000 = f8 80 80 80 80 = "�����" |

4.3.5 U+0000 = fc 80 80 80 80 80 = "������" |

|

5 Illegal code positions |

|

The following UTF-8 sequences should be rejected like malformed |

sequences, because they never represent valid ISO 10646 characters and |

a UTF-8 decoder that accepts them might introduce security problems |

comparable to overlong UTF-8 sequences. |

|

5.1 Single UTF-16 surrogates |

|

5.1.1 U+D800 = ed a0 80 = "���" |

5.1.2 U+DB7F = ed ad bf = "���" |

5.1.3 U+DB80 = ed ae 80 = "���" |

5.1.4 U+DBFF = ed af bf = "���" |

5.1.5 U+DC00 = ed b0 80 = "���" |

5.1.6 U+DF80 = ed be 80 = "���" |

5.1.7 U+DFFF = ed bf bf = "���" |

|

5.2 Paired UTF-16 surrogates |

|

5.2.1 U+D800 U+DC00 = ed a0 80 ed b0 80 = "������" |

5.2.2 U+D800 U+DFFF = ed a0 80 ed bf bf = "������" |

5.2.3 U+DB7F U+DC00 = ed ad bf ed b0 80 = "������" |

5.2.4 U+DB7F U+DFFF = ed ad bf ed bf bf = "������" |

5.2.5 U+DB80 U+DC00 = ed ae 80 ed b0 80 = "������" |

5.2.6 U+DB80 U+DFFF = ed ae 80 ed bf bf = "������" |

5.2.7 U+DBFF U+DC00 = ed af bf ed b0 80 = "������" |

5.2.8 U+DBFF U+DFFF = ed af bf ed bf bf = "������" |

|

5.3 Noncharacter code positions |

|

The following "noncharacters" are "reserved for internal use" by |

applications, and according to older versions of the Unicode Standard |

"should never be interchanged". Unicode Corrigendum #9 dropped the |

latter restriction. Nevertheless, their presence in incoming UTF-8 data |

can remain a potential security risk, depending on what use is made of |

these codes subsequently. Examples of such internal use: |

|

- Some file APIs with 16-bit characters may use the integer value -1 |

= U+FFFF to signal an end-of-file (EOF) or error condition. |

|

- In some UTF-16 receivers, code point U+FFFE might trigger a |

byte-swap operation (to convert between UTF-16LE and UTF-16BE). |

|

With such internal use of noncharacters, it may be desirable and safer |

to block those code points in UTF-8 decoders, as they should never |

occur legitimately in incoming UTF-8 data, and could trigger unsafe |

behaviour in subsequent processing. |

|

Particularly problematic noncharacters in 16-bit applications: |

|

5.3.1 U+FFFE = ef bf be = "" |

5.3.2 U+FFFF = ef bf bf = "" |

|

Other noncharacters: |

|

5.3.3 U+FDD0 .. U+FDEF = ""|

|

5.3.4 U+nFFFE U+nFFFF (for n = 1..10) |

|

" |

" |

|

THE END |

�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/b/c/���������������������������������������������������������������������0000775�0000000�0000000�00000000000�13164507017�0016175�5����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/b/c/e��������������������������������������������������������������������0000664�0000000�0000000�00000000002�13164507017�0016334�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������1

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/b/c/f��������������������������������������������������������������������0000664�0000000�0000000�00000000002�13164507017�0016335�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������3

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/b/c/g��������������������������������������������������������������������0000664�0000000�0000000�00000000002�13164507017�0016336�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������4

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/b/c/h��������������������������������������������������������������������0000664�0000000�0000000�00000000002�13164507017�0016337�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������5

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/b/d/���������������������������������������������������������������������0000775�0000000�0000000�00000000000�13164507017�0016176�5����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/b/d/q��������������������������������������������������������������������0000664�0000000�0000000�00000000002�13164507017�0016351�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������9

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/b/i����������������������������������������������������������������������0000664�0000000�0000000�00000000002�13164507017�0016116�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������6

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/gold-12.txt��������������������������������������������������������������0000664�0000000�0000000�00000001262�13164507017�0017441�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000�������������������������������������������������������������������������������������������������������������������������������������������������������������������������[0;34mtests/input-1.txt�[m �[0;34mtests/input-2.txt�[m

测试行a�[1;33mb�[mc测试测试行a�[1;33mb�[mc测试测试行a�[1;33mb�[mc测试 测试行a�[1;33md�[mc测试测试行a�[1;33md�[mc测试测试行a�[1;33md�[mc测试

测试行a�[1;33mb�[mc测试测试行a�[1;33mb�[mc测试测试行a�[1;33mb�[mc测试 测试行a�[1;33md�[mc测试测试行a�[1;33md�[mc测试测试行a�[1;33md�[mc测试

测试行a�[1;33mb�[mc测试测试行a�[1;33mb�[mc测试测试行a�[1;33mb�[mc测试 测试行a�[1;33md�[mc测试测试行a�[1;33md�[mc测试测试行a�[1;33md�[mc测试

����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/gold-3.txt���������������������������������������������������������������0000664�0000000�0000000�00000000000�13164507017�0017346�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/gold-45-95.txt�����������������������������������������������������������0000664�0000000�0000000�00000001774�13164507017�0017712�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000�������������������������������������������������������������������������������������������������������������������������������������������������������������������������[0;34mtests/input-4.txt�[m �[0;34mtests/input-5.txt�[m

#input, #button { #input, #button {

width: �[1;33m35�[m0px; width: �[1;33m40�[m0px;

height: 40px; height: 40px;

�[1;32m font-size: 30px;�[m

margin: 0�[1;31mpx�[m; margin: 0;

padding: 0�[1;31mpx�[m; padding: 0;

�[1;31m margin-bottom: 15px;�[m

text-align: center; text-align: center;

} }

����icdiff-release-1.9.1/tests/gold-45-h-nb.txt���������������������������������������������������������0000664�0000000�0000000�00000001560�13164507017�0020272�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000�������������������������������������������������������������������������������������������������������������������������������������������������������������������������[0;34mtests/input-4.txt�[m �[0;34mtests/input-5.txt�[m

#input, #button { #input, #button {

width: �[7;33m35�[m0px; width: �[7;33m40�[m0px;

height: 40px; height: 40px;

�[7;32m font-size: 30px;�[m

margin: 0�[7;31mpx�[m; margin: 0;

padding: 0�[7;31mpx�[m; padding: 0;

�[7;31m margin-bottom: 15px;�[m

text-align: center; text-align: center;

} }

������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/gold-45-h.txt������������������������������������������������������������0000664�0000000�0000000�00000001560�13164507017�0017675�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000�������������������������������������������������������������������������������������������������������������������������������������������������������������������������[0;34mtests/input-4.txt�[m �[0;34mtests/input-5.txt�[m

#input, #button { #input, #button {

width: �[7;33m35�[m0px; width: �[7;33m40�[m0px;

height: 40px; height: 40px;

�[7;32m font-size: 30px;�[m

margin: 0�[7;31mpx�[m; margin: 0;

padding: 0�[7;31mpx�[m; padding: 0;

�[7;31m margin-bottom: 15px;�[m

text-align: center; text-align: center;

} }

������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/gold-45-h3.txt�����������������������������������������������������������0000664�0000000�0000000�00000000550�13164507017�0017756�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000�������������������������������������������������������������������������������������������������������������������������������������������������������������������������[0;34mtests/input-4.txt�[m �[0;34mtests/input-5.txt�[m

#input, #button { #input, #button {

width: �[1;33m35�[m0px; width: �[1;33m40�[m0px;

height: 40px; height: 40px;

��������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/gold-45-l.txt������������������������������������������������������������0000664�0000000�0000000�00000000067�13164507017�0017702�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������error: to use arbitrary file labels, specify -L twice.

�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/gold-45-ln.txt�����������������������������������������������������������0000664�0000000�0000000�00000001560�13164507017�0020057�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000�������������������������������������������������������������������������������������������������������������������������������������������������������������������������[0;34mtests/input-4.txt�[m �[0;34mtests/input-5.txt�[m

1 #input, #button { 1 #input, #button {

2 width: �[1;33m35�[m0px; 2 width: �[1;33m40�[m0px;

3 height: 40px; 3 height: 40px;

4 �[1;32m font-size: 30px;�[m

4 margin: 0�[1;31mpx�[m; 5 margin: 0;

5 padding: 0�[1;31mpx�[m; 6 padding: 0;

6 �[1;31m margin-bottom: 15px;�[m

7 text-align: center; 7 text-align: center;

8 } 8 }

������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/gold-45-lr.txt�����������������������������������������������������������0000664�0000000�0000000�00000001560�13164507017�0020063�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000�������������������������������������������������������������������������������������������������������������������������������������������������������������������������[0;34mleft�[m �[0;34mright�[m

#input, #button { #input, #button {

width: �[1;33m35�[m0px; width: �[1;33m40�[m0px;

height: 40px; height: 40px;

�[1;32m font-size: 30px;�[m

margin: 0�[1;31mpx�[m; margin: 0;

padding: 0�[1;31mpx�[m; padding: 0;

�[1;31m margin-bottom: 15px;�[m

text-align: center; text-align: center;

} }

������������������������������������������������������������������������������������������������������������������������������������������������icdiff-release-1.9.1/tests/gold-45-nb.txt�����������������������������������������������������������0000664�0000000�0000000�00000001560�13164507017�0020045�0����������������������������������������������������������������������������������������������������ustar�00root����������������������������root����������������������������0000000�0000000�������������������������������������������������������������������������������������������������������������������������������������������������������������������������[0;34mtests/input-4.txt�[m �[0;34mtests/input-5.txt�[m