fastText-0.9.2/�������������������������������������������������������������������������������������0000755�0001750�0000176�00000000000�13651775021�012666� 5����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/get-wikimedia.sh���������������������������������������������������������������������0000755�0001750�0000176�00000006356�13651775021�015757� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������#!/usr/bin/env bash

#

# Copyright (c) 2016-present, Facebook, Inc.

# All rights reserved.

#

# This source code is licensed under the MIT license found in the

# LICENSE file in the root directory of this source tree.

#

set -e

normalize_text() {

sed -e "s/’/'/g" -e "s/′/'/g" -e "s/''/ /g" -e "s/'/ ' /g" -e "s/“/\"/g" -e "s/”/\"/g" \

-e 's/"/ " /g' -e 's/\./ \. /g' -e 's/

/ /g' -e 's/, / , /g' -e 's/(/ ( /g' -e 's/)/ ) /g' -e 's/\!/ \! /g' \

-e 's/\?/ \? /g' -e 's/\;/ /g' -e 's/\:/ /g' -e 's/-/ - /g' -e 's/=/ /g' -e 's/=/ /g' -e 's/*/ /g' -e 's/|/ /g' \

-e 's/«/ /g' | tr 0-9 " "

}

export LANGUAGE=en_US.UTF-8

export LC_ALL=en_US.UTF-8

export LANG=en_US.UTF-8

NOW=$(date +"%Y%m%d")

ROOT="data/wikimedia/${NOW}"

mkdir -p "${ROOT}"

echo "Saving data in ""$ROOT"

read -r -p "Choose a language (e.g. en, bh, fr, etc.): " choice

LANG="$choice"

echo "Chosen language: ""$LANG"

read -r -p "Continue to download (WARNING: This might be big and can take a long time!)(y/n)? " choice

case "$choice" in

y|Y ) echo "Starting download...";;

n|N ) echo "Exiting";exit 1;;

* ) echo "Invalid answer";exit 1;;

esac

wget -c "https://dumps.wikimedia.org/""$LANG""wiki/latest/""${LANG}""wiki-latest-pages-articles.xml.bz2" -P "${ROOT}"

echo "Processing ""$ROOT"/"$LANG""wiki-latest-pages-articles.xml.bz2"

bzip2 -c -d "$ROOT"/"$LANG""wiki-latest-pages-articles.xml.bz2" | awk '{print tolower($0);}' | perl -e '

# Program to filter Wikipedia XML dumps to "clean" text consisting only of lowercase

# letters (a-z, converted from A-Z), and spaces (never consecutive)...

# All other characters are converted to spaces. Only text which normally appears.

# in the web browser is displayed. Tables are removed. Image captions are.

# preserved. Links are converted to normal text. Digits are spelled out.

# *** Modified to not spell digits or throw away non-ASCII characters ***

# Written by Matt Mahoney, June 10, 2006. This program is released to the public domain.

$/=">"; # input record separator

while (<>) {

if (/ ...

if (/#redirect/i) {$text=0;} # remove #REDIRECT

if ($text) {

# Remove any text not normally visible

if (/<\/text>/) {$text=0;}

s/<.*>//; # remove xml tags

s/&/&/g; # decode URL encoded chars

s/<//g;

s///g; # remove references ...

s/<[^>]*>//g; # remove xhtml tags

s/\[http:[^] ]*/[/g; # remove normal url, preserve visible text

s/\|thumb//ig; # remove images links, preserve caption

s/\|left//ig;

s/\|right//ig;

s/\|\d+px//ig;

s/\[\[image:[^\[\]]*\|//ig;

s/\[\[category:([^|\]]*)[^]]*\]\]/[[$1]]/ig; # show categories without markup

s/\[\[[a-z\-]*:[^\]]*\]\]//g; # remove links to other languages

s/\[\[[^\|\]]*\|/[[/g; # remove wiki url, preserve visible text

s/{{[^}]*}}//g; # remove {{icons}} and {tables}

s/{[^}]*}//g;

s/\[//g; # remove [ and ]

s/\]//g;

s/&[^;]*;/ /g; # remove URL encoded chars

$_=" $_ ";

chop;

print $_;

}

}

' | normalize_text | awk '{if (NF>1) print;}' | tr -s " " | shuf > "${ROOT}"/wiki."${LANG}".txt

����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/quantization-example.sh��������������������������������������������������������������0000755�0001750�0000176�00000003050�13651775021�017402� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������myshuf() {

perl -MList::Util=shuffle -e 'print shuffle(<>);' "$@";

}

normalize_text() {

tr '[:upper:]' '[:lower:]' | sed -e 's/^/__label__/g' | \

sed -e "s/'/ ' /g" -e 's/"//g' -e 's/\./ \. /g' -e 's/

/ /g' \

-e 's/,/ , /g' -e 's/(/ ( /g' -e 's/)/ ) /g' -e 's/\!/ \! /g' \

-e 's/\?/ \? /g' -e 's/\;/ /g' -e 's/\:/ /g' | tr -s " " | myshuf

}

RESULTDIR=result

DATADIR=data

mkdir -p "${RESULTDIR}"

mkdir -p "${DATADIR}"

if [ ! -f "${DATADIR}/dbpedia.train" ]

then

wget -c "https://github.com/le-scientifique/torchDatasets/raw/master/dbpedia_csv.tar.gz" -O "${DATADIR}/dbpedia_csv.tar.gz"

tar -xzvf "${DATADIR}/dbpedia_csv.tar.gz" -C "${DATADIR}"

cat "${DATADIR}/dbpedia_csv/train.csv" | normalize_text > "${DATADIR}/dbpedia.train"

cat "${DATADIR}/dbpedia_csv/test.csv" | normalize_text > "${DATADIR}/dbpedia.test"

fi

make

echo "Training..."

./fasttext supervised -input "${DATADIR}/dbpedia.train" -output "${RESULTDIR}/dbpedia" -dim 10 -lr 0.1 -wordNgrams 2 -minCount 1 -bucket 10000000 -epoch 5 -thread 4

echo "Quantizing..."

./fasttext quantize -output "${RESULTDIR}/dbpedia" -input "${DATADIR}/dbpedia.train" -qnorm -retrain -epoch 1 -cutoff 100000

echo "Testing original model..."

./fasttext test "${RESULTDIR}/dbpedia.bin" "${DATADIR}/dbpedia.test"

echo "Testing quantized model..."

./fasttext test "${RESULTDIR}/dbpedia.ftz" "${DATADIR}/dbpedia.test"

wc -c < "${RESULTDIR}/dbpedia.bin" | awk '{print "Size of the original model:\t",$1;}'

wc -c < "${RESULTDIR}/dbpedia.ftz" | awk '{print "Size of the quantized model:\t",$1;}'

����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/word-vector-example.sh���������������������������������������������������������������0000755�0001750�0000176�00000002233�13651775021�017131� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������#!/usr/bin/env bash

#

# Copyright (c) 2016-present, Facebook, Inc.

# All rights reserved.

#

# This source code is licensed under the MIT license found in the

# LICENSE file in the root directory of this source tree.

#

RESULTDIR=result

DATADIR=data

mkdir -p "${RESULTDIR}"

mkdir -p "${DATADIR}"

if [ ! -f "${DATADIR}/fil9" ]

then

wget -c http://mattmahoney.net/dc/enwik9.zip -P "${DATADIR}"

unzip "${DATADIR}/enwik9.zip" -d "${DATADIR}"

perl wikifil.pl "${DATADIR}/enwik9" > "${DATADIR}"/fil9

fi

if [ ! -f "${DATADIR}/rw/rw.txt" ]

then

wget -c https://nlp.stanford.edu/~lmthang/morphoNLM/rw.zip -P "${DATADIR}"

unzip "${DATADIR}/rw.zip" -d "${DATADIR}"

fi

make

./fasttext skipgram -input "${DATADIR}"/fil9 -output "${RESULTDIR}"/fil9 -lr 0.025 -dim 100 \

-ws 5 -epoch 1 -minCount 5 -neg 5 -loss ns -bucket 2000000 \

-minn 3 -maxn 6 -thread 4 -t 1e-4 -lrUpdateRate 100

cut -f 1,2 "${DATADIR}"/rw/rw.txt | awk '{print tolower($0)}' | tr '\t' '\n' > "${DATADIR}"/queries.txt

cat "${DATADIR}"/queries.txt | ./fasttext print-word-vectors "${RESULTDIR}"/fil9.bin > "${RESULTDIR}"/vectors.txt

python eval.py -m "${RESULTDIR}"/vectors.txt -d "${DATADIR}"/rw/rw.txt

���������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/tests/�������������������������������������������������������������������������������0000755�0001750�0000176�00000000000�13651775021�014030� 5����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/tests/fetch_test_data.sh�������������������������������������������������������������0000755�0001750�0000176�00000014630�13651775021�017514� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������#!/usr/bin/env bash

#

# Copyright (c) 2016-present, Facebook, Inc.

# All rights reserved.

#

# This source code is licensed under the MIT license found in the

# LICENSE file in the root directory of this source tree.

#

DATADIR=${DATADIR:-data}

report_error() {

echo "Error on line $1 of $0"

}

myshuf() {

perl -MList::Util=shuffle -e 'print shuffle(<>);' "$@";

}

normalize_text() {

tr '[:upper:]' '[:lower:]' | sed -e 's/^/__label__/g' | \

sed -e "s/'/ ' /g" -e 's/"//g' -e 's/\./ \. /g' -e 's/

/ /g' \

-e 's/,/ , /g' -e 's/(/ ( /g' -e 's/)/ ) /g' -e 's/\!/ \! /g' \

-e 's/\?/ \? /g' -e 's/\;/ /g' -e 's/\:/ /g' | tr -s " " | myshuf

}

set -e

trap 'report_error $LINENO' ERR

mkdir -p "${DATADIR}"

# Unsupervised datasets

data_result="${DATADIR}/rw_queries.txt"

if [ ! -f "$data_result" ]

then

cut -f 1,2 "${DATADIR}"/rw/rw.txt | awk '{print tolower($0)}' | tr '\t' '\n' > "$data_result" || rm -f "$data_result"

fi

data_result="${DATADIR}/enwik9.zip"

if [ ! -f "$data_result" ] || \

[ $(md5sum "$data_result" | cut -f 1 -d ' ') != "3e773f8a1577fda2e27f871ca17f31fd" ]

then

wget -c http://mattmahoney.net/dc/enwik9.zip -P "${DATADIR}" || rm -f "$data_result"

unzip "$data_result" -d "${DATADIR}" || rm -f "$data_result"

fi

data_result="${DATADIR}/fil9"

if [ ! -f "$data_result" ]

then

perl wikifil.pl "${DATADIR}/enwik9" > "$data_result" || rm -f "$data_result"

fi

data_result="${DATADIR}/rw/rw.txt"

if [ ! -f "$data_result" ]

then

wget -c https://nlp.stanford.edu/~lmthang/morphoNLM/rw.zip -P "${DATADIR}"

unzip "${DATADIR}/rw.zip" -d "${DATADIR}" || rm -f "$data_result"

fi

# Supervised datasets

# Each datasets comes with a .train and a .test to measure performance

echo "Downloading dataset dbpedia"

data_result="${DATADIR}/dbpedia_csv.tar.gz"

if [ ! -f "$data_result" ] || \

[ $(md5sum "$data_result" | cut -f 1 -d ' ') != "8139d58cf075c7f70d085358e73af9b3" ]

then

wget -c "https://github.com/le-scientifique/torchDatasets/raw/master/dbpedia_csv.tar.gz" -O "$data_result"

tar -xzvf "$data_result" -C "${DATADIR}"

fi

data_result="${DATADIR}/dbpedia.train"

if [ ! -f "$data_result" ]

then

cat "${DATADIR}/dbpedia_csv/train.csv" | normalize_text > "$data_result" || rm -f "$data_result"

fi

data_result="${DATADIR}/dbpedia.test"

if [ ! -f "$data_result" ]

then

cat "${DATADIR}/dbpedia_csv/test.csv" | normalize_text > "$data_result" || rm -f "$data_result"

fi

echo "Downloading dataset tatoeba for langid"

data_result="${DATADIR}"/langid/all.txt

if [ ! -f "$data_result" ]

then

mkdir -p "${DATADIR}"/langid

wget http://downloads.tatoeba.org/exports/sentences.tar.bz2 -O "${DATADIR}"/langid/sentences.tar.bz2

tar xvfj "${DATADIR}"/langid/sentences.tar.bz2 --directory "${DATADIR}"/langid || exit 1

awk -F"\t" '{print"__label__"$2" "$3}' < "${DATADIR}"/langid/sentences.csv | shuf > "$data_result"

fi

data_result="${DATADIR}/langid.train"

if [ ! -f "$data_result" ]

then

tail -n +10001 "${DATADIR}"/langid/all.txt > "$data_result"

fi

data_result="${DATADIR}/langid.valid"

if [ ! -f "$data_result" ]

then

head -n 10000 "${DATADIR}"/langid/all.txt > "$data_result"

fi

echo "Downloading cooking dataset"

data_result="${DATADIR}"/cooking/cooking.stackexchange.txt

if [ ! -f "$data_result" ]

then

mkdir -p "${DATADIR}"/cooking/

wget https://dl.fbaipublicfiles.com/fasttext/data/cooking.stackexchange.tar.gz -O "${DATADIR}"/cooking/cooking.stackexchange.tar.gz

tar xvzf "${DATADIR}"/cooking/cooking.stackexchange.tar.gz --directory "${DATADIR}"/cooking || exit 1

cat "${DATADIR}"/cooking/cooking.stackexchange.txt | sed -e "s/\([.\!?,'/()]\)/ \1 /g" | tr "[:upper:]" "[:lower:]" > "${DATADIR}"/cooking/cooking.preprocessed.txt

fi

data_result="${DATADIR}"/cooking.train

if [ ! -f "$data_result" ]

then

head -n 12404 "${DATADIR}"/cooking/cooking.preprocessed.txt > "${DATADIR}"/cooking.train

fi

data_result="${DATADIR}"/cooking.valid

if [ ! -f "$data_result" ]

then

tail -n 3000 "${DATADIR}"/cooking/cooking.preprocessed.txt > "${DATADIR}"/cooking.valid

fi

echo "Checking for YFCC100M"

data_result="${DATADIR}"/YFCC100M/train

if [ ! -f "$data_result" ]

then

echo 'Download YFCC100M, unpack it and place train into the following path: '"$data_result"

echo 'You can download YFCC100M at :'"https://fasttext.cc/docs/en/dataset.html"

echo 'After you download this, run the script again'

exit 1

fi

data_result="${DATADIR}"/YFCC100M/test

if [ ! -f "$data_result" ]

then

echo 'Download YFCC100M, unpack it and place test into the following path: '"$data_result"

echo 'You can download YFCC100M at :'"https://fasttext.cc/docs/en/dataset.html"

echo 'After you download this, run the script again'

exit 1

fi

DATASET=(

ag_news

sogou_news

dbpedia

yelp_review_polarity

yelp_review_full

yahoo_answers

amazon_review_full

amazon_review_polarity

)

ID=(

0Bz8a_Dbh9QhbUDNpeUdjb0wxRms # ag_news

0Bz8a_Dbh9QhbUkVqNEszd0pHaFE # sogou_news

0Bz8a_Dbh9QhbQ2Vic1kxMmZZQ1k # dbpedia

0Bz8a_Dbh9QhbNUpYQ2N3SGlFaDg # yelp_review_polarity

0Bz8a_Dbh9QhbZlU4dXhHTFhZQU0 # yelp_review_full

0Bz8a_Dbh9Qhbd2JNdDBsQUdocVU # yahoo_answers

0Bz8a_Dbh9QhbZVhsUnRWRDhETzA # amazon_review_full

0Bz8a_Dbh9QhbaW12WVVZS2drcnM # amazon_review_polarity

)

# Small datasets first

for i in {0..0}

do

echo "Downloading dataset ${DATASET[i]}"

if [ ! -f "${DATADIR}/${DATASET[i]}.train" ]

then

wget -c "https://drive.google.com/uc?export=download&id=${ID[i]}" -O "${DATADIR}/${DATASET[i]}_csv.tar.gz"

tar -xzvf "${DATADIR}/${DATASET[i]}_csv.tar.gz" -C "${DATADIR}"

cat "${DATADIR}/${DATASET[i]}_csv/train.csv" | normalize_text > "${DATADIR}/${DATASET[i]}.train"

cat "${DATADIR}/${DATASET[i]}_csv/test.csv" | normalize_text > "${DATADIR}/${DATASET[i]}.test"

fi

done

# Large datasets require a bit more work due to the extra request page

for i in {1..7}

do

echo "Downloading dataset ${DATASET[i]}"

if [ ! -f "${DATADIR}/${DATASET[i]}.train" ]

then

curl -c /tmp/cookies "https://drive.google.com/uc?export=download&id=${ID[i]}" > /tmp/intermezzo.html

curl -L -b /tmp/cookies "https://drive.google.com$(cat /tmp/intermezzo.html | grep -Po 'uc-download-link" [^>]* href="\K[^"]*' | sed 's/\&/\&/g')" > "${DATADIR}/${DATASET[i]}_csv.tar.gz"

tar -xzvf "${DATADIR}/${DATASET[i]}_csv.tar.gz" -C "${DATADIR}"

cat "${DATADIR}/${DATASET[i]}_csv/train.csv" | normalize_text > "${DATADIR}/${DATASET[i]}.train"

cat "${DATADIR}/${DATASET[i]}_csv/test.csv" | normalize_text > "${DATADIR}/${DATASET[i]}.test"

fi

done

��������������������������������������������������������������������������������������������������������fastText-0.9.2/README.md����������������������������������������������������������������������������0000644�0001750�0000176�00000032454�13651775021�014155� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������# fastText

[fastText](https://fasttext.cc/) is a library for efficient learning of word representations and sentence classification.

[](https://circleci.com/gh/facebookresearch/fastText/tree/master)

## Table of contents

* [Resources](#resources)

* [Models](#models)

* [Supplementary data](#supplementary-data)

* [FAQ](#faq)

* [Cheatsheet](#cheatsheet)

* [Requirements](#requirements)

* [Building fastText](#building-fasttext)

* [Getting the source code](#getting-the-source-code)

* [Building fastText using make (preferred)](#building-fasttext-using-make-preferred)

* [Building fastText using cmake](#building-fasttext-using-cmake)

* [Building fastText for Python](#building-fasttext-for-python)

* [Example use cases](#example-use-cases)

* [Word representation learning](#word-representation-learning)

* [Obtaining word vectors for out-of-vocabulary words](#obtaining-word-vectors-for-out-of-vocabulary-words)

* [Text classification](#text-classification)

* [Full documentation](#full-documentation)

* [References](#references)

* [Enriching Word Vectors with Subword Information](#enriching-word-vectors-with-subword-information)

* [Bag of Tricks for Efficient Text Classification](#bag-of-tricks-for-efficient-text-classification)

* [FastText.zip: Compressing text classification models](#fasttextzip-compressing-text-classification-models)

* [Join the fastText community](#join-the-fasttext-community)

* [License](#license)

## Resources

### Models

- Recent state-of-the-art [English word vectors](https://fasttext.cc/docs/en/english-vectors.html).

- Word vectors for [157 languages trained on Wikipedia and Crawl](https://github.com/facebookresearch/fastText/blob/master/docs/crawl-vectors.md).

- Models for [language identification](https://fasttext.cc/docs/en/language-identification.html#content) and [various supervised tasks](https://fasttext.cc/docs/en/supervised-models.html#content).

### Supplementary data

- The preprocessed [YFCC100M data](https://fasttext.cc/docs/en/dataset.html#content) used in [2].

### FAQ

You can find [answers to frequently asked questions](https://fasttext.cc/docs/en/faqs.html#content) on our [website](https://fasttext.cc/).

### Cheatsheet

We also provide a [cheatsheet](https://fasttext.cc/docs/en/cheatsheet.html#content) full of useful one-liners.

## Requirements

We are continuously building and testing our library, CLI and Python bindings under various docker images using [circleci](https://circleci.com/).

Generally, **fastText** builds on modern Mac OS and Linux distributions.

Since it uses some C++11 features, it requires a compiler with good C++11 support.

These include :

* (g++-4.7.2 or newer) or (clang-3.3 or newer)

Compilation is carried out using a Makefile, so you will need to have a working **make**.

If you want to use **cmake** you need at least version 2.8.9.

One of the oldest distributions we successfully built and tested the CLI under is [Debian jessie](https://www.debian.org/releases/jessie/).

For the word-similarity evaluation script you will need:

* Python 2.6 or newer

* NumPy & SciPy

For the python bindings (see the subdirectory python) you will need:

* Python version 2.7 or >=3.4

* NumPy & SciPy

* [pybind11](https://github.com/pybind/pybind11)

One of the oldest distributions we successfully built and tested the Python bindings under is [Debian jessie](https://www.debian.org/releases/jessie/).

If these requirements make it impossible for you to use fastText, please open an issue and we will try to accommodate you.

## Building fastText

We discuss building the latest stable version of fastText.

### Getting the source code

You can find our [latest stable release](https://github.com/facebookresearch/fastText/releases/latest) in the usual place.

There is also the master branch that contains all of our most recent work, but comes along with all the usual caveats of an unstable branch. You might want to use this if you are a developer or power-user.

### Building fastText using make (preferred)

```

$ wget https://github.com/facebookresearch/fastText/archive/v0.9.2.zip

$ unzip v0.9.2.zip

$ cd fastText-0.9.2

$ make

```

This will produce object files for all the classes as well as the main binary `fasttext`.

If you do not plan on using the default system-wide compiler, update the two macros defined at the beginning of the Makefile (CC and INCLUDES).

### Building fastText using cmake

For now this is not part of a release, so you will need to clone the master branch.

```

$ git clone https://github.com/facebookresearch/fastText.git

$ cd fastText

$ mkdir build && cd build && cmake ..

$ make && make install

```

This will create the fasttext binary and also all relevant libraries (shared, static, PIC).

### Building fastText for Python

For now this is not part of a release, so you will need to clone the master branch.

```

$ git clone https://github.com/facebookresearch/fastText.git

$ cd fastText

$ pip install .

```

For further information and introduction see python/README.md

## Example use cases

This library has two main use cases: word representation learning and text classification.

These were described in the two papers [1](#enriching-word-vectors-with-subword-information) and [2](#bag-of-tricks-for-efficient-text-classification).

### Word representation learning

In order to learn word vectors, as described in [1](#enriching-word-vectors-with-subword-information), do:

```

$ ./fasttext skipgram -input data.txt -output model

```

where `data.txt` is a training file containing `UTF-8` encoded text.

By default the word vectors will take into account character n-grams from 3 to 6 characters.

At the end of optimization the program will save two files: `model.bin` and `model.vec`.

`model.vec` is a text file containing the word vectors, one per line.

`model.bin` is a binary file containing the parameters of the model along with the dictionary and all hyper parameters.

The binary file can be used later to compute word vectors or to restart the optimization.

### Obtaining word vectors for out-of-vocabulary words

The previously trained model can be used to compute word vectors for out-of-vocabulary words.

Provided you have a text file `queries.txt` containing words for which you want to compute vectors, use the following command:

```

$ ./fasttext print-word-vectors model.bin < queries.txt

```

This will output word vectors to the standard output, one vector per line.

This can also be used with pipes:

```

$ cat queries.txt | ./fasttext print-word-vectors model.bin

```

See the provided scripts for an example. For instance, running:

```

$ ./word-vector-example.sh

```

will compile the code, download data, compute word vectors and evaluate them on the rare words similarity dataset RW [Thang et al. 2013].

### Text classification

This library can also be used to train supervised text classifiers, for instance for sentiment analysis.

In order to train a text classifier using the method described in [2](#bag-of-tricks-for-efficient-text-classification), use:

```

$ ./fasttext supervised -input train.txt -output model

```

where `train.txt` is a text file containing a training sentence per line along with the labels.

By default, we assume that labels are words that are prefixed by the string `__label__`.

This will output two files: `model.bin` and `model.vec`.

Once the model was trained, you can evaluate it by computing the precision and recall at k (P@k and R@k) on a test set using:

```

$ ./fasttext test model.bin test.txt k

```

The argument `k` is optional, and is equal to `1` by default.

In order to obtain the k most likely labels for a piece of text, use:

```

$ ./fasttext predict model.bin test.txt k

```

or use `predict-prob` to also get the probability for each label

```

$ ./fasttext predict-prob model.bin test.txt k

```

where `test.txt` contains a piece of text to classify per line.

Doing so will print to the standard output the k most likely labels for each line.

The argument `k` is optional, and equal to `1` by default.

See `classification-example.sh` for an example use case.

In order to reproduce results from the paper [2](#bag-of-tricks-for-efficient-text-classification), run `classification-results.sh`, this will download all the datasets and reproduce the results from Table 1.

If you want to compute vector representations of sentences or paragraphs, please use:

```

$ ./fasttext print-sentence-vectors model.bin < text.txt

```

This assumes that the `text.txt` file contains the paragraphs that you want to get vectors for.

The program will output one vector representation per line in the file.

You can also quantize a supervised model to reduce its memory usage with the following command:

```

$ ./fasttext quantize -output model

```

This will create a `.ftz` file with a smaller memory footprint. All the standard functionality, like `test` or `predict` work the same way on the quantized models:

```

$ ./fasttext test model.ftz test.txt

```

The quantization procedure follows the steps described in [3](#fasttextzip-compressing-text-classification-models). You can

run the script `quantization-example.sh` for an example.

## Full documentation

Invoke a command without arguments to list available arguments and their default values:

```

$ ./fasttext supervised

Empty input or output path.

The following arguments are mandatory:

-input training file path

-output output file path

The following arguments are optional:

-verbose verbosity level [2]

The following arguments for the dictionary are optional:

-minCount minimal number of word occurrences [1]

-minCountLabel minimal number of label occurrences [0]

-wordNgrams max length of word ngram [1]

-bucket number of buckets [2000000]

-minn min length of char ngram [0]

-maxn max length of char ngram [0]

-t sampling threshold [0.0001]

-label labels prefix [__label__]

The following arguments for training are optional:

-lr learning rate [0.1]

-lrUpdateRate change the rate of updates for the learning rate [100]

-dim size of word vectors [100]

-ws size of the context window [5]

-epoch number of epochs [5]

-neg number of negatives sampled [5]

-loss loss function {ns, hs, softmax} [softmax]

-thread number of threads [12]

-pretrainedVectors pretrained word vectors for supervised learning []

-saveOutput whether output params should be saved [0]

The following arguments for quantization are optional:

-cutoff number of words and ngrams to retain [0]

-retrain finetune embeddings if a cutoff is applied [0]

-qnorm quantizing the norm separately [0]

-qout quantizing the classifier [0]

-dsub size of each sub-vector [2]

```

Defaults may vary by mode. (Word-representation modes `skipgram` and `cbow` use a default `-minCount` of 5.)

## References

Please cite [1](#enriching-word-vectors-with-subword-information) if using this code for learning word representations or [2](#bag-of-tricks-for-efficient-text-classification) if using for text classification.

### Enriching Word Vectors with Subword Information

[1] P. Bojanowski\*, E. Grave\*, A. Joulin, T. Mikolov, [*Enriching Word Vectors with Subword Information*](https://arxiv.org/abs/1607.04606)

```

@article{bojanowski2017enriching,

title={Enriching Word Vectors with Subword Information},

author={Bojanowski, Piotr and Grave, Edouard and Joulin, Armand and Mikolov, Tomas},

journal={Transactions of the Association for Computational Linguistics},

volume={5},

year={2017},

issn={2307-387X},

pages={135--146}

}

```

### Bag of Tricks for Efficient Text Classification

[2] A. Joulin, E. Grave, P. Bojanowski, T. Mikolov, [*Bag of Tricks for Efficient Text Classification*](https://arxiv.org/abs/1607.01759)

```

@InProceedings{joulin2017bag,

title={Bag of Tricks for Efficient Text Classification},

author={Joulin, Armand and Grave, Edouard and Bojanowski, Piotr and Mikolov, Tomas},

booktitle={Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 2, Short Papers},

month={April},

year={2017},

publisher={Association for Computational Linguistics},

pages={427--431},

}

```

### FastText.zip: Compressing text classification models

[3] A. Joulin, E. Grave, P. Bojanowski, M. Douze, H. Jégou, T. Mikolov, [*FastText.zip: Compressing text classification models*](https://arxiv.org/abs/1612.03651)

```

@article{joulin2016fasttext,

title={FastText.zip: Compressing text classification models},

author={Joulin, Armand and Grave, Edouard and Bojanowski, Piotr and Douze, Matthijs and J{\'e}gou, H{\'e}rve and Mikolov, Tomas},

journal={arXiv preprint arXiv:1612.03651},

year={2016}

}

```

(\* These authors contributed equally.)

## Join the fastText community

* Facebook page: https://www.facebook.com/groups/1174547215919768

* Google group: https://groups.google.com/forum/#!forum/fasttext-library

* Contact: [egrave@fb.com](mailto:egrave@fb.com), [bojanowski@fb.com](mailto:bojanowski@fb.com), [ajoulin@fb.com](mailto:ajoulin@fb.com), [tmikolov@fb.com](mailto:tmikolov@fb.com)

See the CONTRIBUTING file for information about how to help out.

## License

fastText is MIT-licensed.

��������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/docs/��������������������������������������������������������������������������������0000755�0001750�0000176�00000000000�13651775021�013616� 5����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/docs/python-module.md����������������������������������������������������������������0000644�0001750�0000176�00000032575�13651775021�016760� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������---

id: python-module

title: Python module

---

In this document we present how to use fastText in python.

## Table of contents

* [Requirements](#requirements)

* [Installation](#installation)

* [Usage overview](#usage-overview)

* [Word representation model](#word-representation-model)

* [Text classification model](#text-classification-model)

* [IMPORTANT: Preprocessing data / encoding conventions](#important-preprocessing-data-encoding-conventions)

* [More examples](#more-examples)

* [API](#api)

* [`train_unsupervised` parameters](#train_unsupervised-parameters)

* [`train_supervised` parameters](#train_supervised-parameters)

* [`model` object](#model-object)

# Requirements

[fastText](https://fasttext.cc/) builds on modern Mac OS and Linux distributions.

Since it uses C\++11 features, it requires a compiler with good C++11 support. You will need [Python](https://www.python.org/) (version 2.7 or ≥ 3.4), [NumPy](http://www.numpy.org/) & [SciPy](https://www.scipy.org/) and [pybind11](https://github.com/pybind/pybind11).

# Installation

To install the latest release, you can do :

```bash

$ pip install fasttext

```

or, to get the latest development version of fasttext, you can install from our github repository :

```bash

$ git clone https://github.com/facebookresearch/fastText.git

$ cd fastText

$ sudo pip install .

$ # or :

$ sudo python setup.py install

```

# Usage overview

## Word representation model

In order to learn word vectors, as [described here](/docs/en/references.html#enriching-word-vectors-with-subword-information), we can use `fasttext.train_unsupervised` function like this:

```py

import fasttext

# Skipgram model :

model = fasttext.train_unsupervised('data.txt', model='skipgram')

# or, cbow model :

model = fasttext.train_unsupervised('data.txt', model='cbow')

```

where `data.txt` is a training file containing utf-8 encoded text.

The returned `model` object represents your learned model, and you can use it to retrieve information.

```py

print(model.words) # list of words in dictionary

print(model['king']) # get the vector of the word 'king'

```

### Saving and loading a model object

You can save your trained model object by calling the function `save_model`.

```py

model.save_model("model_filename.bin")

```

and retrieve it later thanks to the function `load_model` :

```py

model = fasttext.load_model("model_filename.bin")

```

For more information about word representation usage of fasttext, you can refer to our [word representations tutorial](/docs/en/unsupervised-tutorial.html).

## Text classification model

In order to train a text classifier using the method [described here](/docs/en/references.html#bag-of-tricks-for-efficient-text-classification), we can use `fasttext.train_supervised` function like this:

```py

import fasttext

model = fasttext.train_supervised('data.train.txt')

```

where `data.train.txt` is a text file containing a training sentence per line along with the labels. By default, we assume that labels are words that are prefixed by the string `__label__`

Once the model is trained, we can retrieve the list of words and labels:

```py

print(model.words)

print(model.labels)

```

To evaluate our model by computing the precision at 1 (P@1) and the recall on a test set, we use the `test` function:

```py

def print_results(N, p, r):

print("N\t" + str(N))

print("P@{}\t{:.3f}".format(1, p))

print("R@{}\t{:.3f}".format(1, r))

print_results(*model.test('test.txt'))

```

We can also predict labels for a specific text :

```py

model.predict("Which baking dish is best to bake a banana bread ?")

```

By default, `predict` returns only one label : the one with the highest probability. You can also predict more than one label by specifying the parameter `k`:

```py

model.predict("Which baking dish is best to bake a banana bread ?", k=3)

```

If you want to predict more than one sentence you can pass an array of strings :

```py

model.predict(["Which baking dish is best to bake a banana bread ?", "Why not put knives in the dishwasher?"], k=3)

```

Of course, you can also save and load a model to/from a file as [in the word representation usage](#saving-and-loading-a-model-object).

For more information about text classification usage of fasttext, you can refer to our [text classification tutorial](/docs/en/supervised-tutorial.html).

### Compress model files with quantization

When you want to save a supervised model file, fastText can compress it in order to have a much smaller model file by sacrificing only a little bit performance.

```py

# with the previously trained `model` object, call :

model.quantize(input='data.train.txt', retrain=True)

# then display results and save the new model :

print_results(*model.test(valid_data))

model.save_model("model_filename.ftz")

```

`model_filename.ftz` will have a much smaller size than `model_filename.bin`.

For further reading on quantization, you can refer to [this paragraph from our blog post](/blog/2017/10/02/blog-post.html#model-compression).

## IMPORTANT: Preprocessing data / encoding conventions

In general it is important to properly preprocess your data. In particular our example scripts in the [root folder](https://github.com/facebookresearch/fastText) do this.

fastText assumes UTF-8 encoded text. All text must be [unicode for Python2](https://docs.python.org/2/library/functions.html#unicode) and [str for Python3](https://docs.python.org/3.5/library/stdtypes.html#textseq). The passed text will be [encoded as UTF-8 by pybind11](https://pybind11.readthedocs.io/en/master/advanced/cast/strings.html?highlight=utf-8#strings-bytes-and-unicode-conversions) before passed to the fastText C++ library. This means it is important to use UTF-8 encoded text when building a model. On Unix-like systems you can convert text using [iconv](https://en.wikipedia.org/wiki/Iconv).

fastText will tokenize (split text into pieces) based on the following ASCII characters (bytes). In particular, it is not aware of UTF-8 whitespace. We advice the user to convert UTF-8 whitespace / word boundaries into one of the following symbols as appropiate.

* space

* tab

* vertical tab

* carriage return

* formfeed

* the null character

The newline character is used to delimit lines of text. In particular, the EOS token is appended to a line of text if a newline character is encountered. The only exception is if the number of tokens exceeds the MAX\_LINE\_SIZE constant as defined in the [Dictionary header](https://github.com/facebookresearch/fastText/blob/master/src/dictionary.h). This means if you have text that is not separate by newlines, such as the [fil9 dataset](http://mattmahoney.net/dc/textdata), it will be broken into chunks with MAX\_LINE\_SIZE of tokens and the EOS token is not appended.

The length of a token is the number of UTF-8 characters by considering the [leading two bits of a byte](https://en.wikipedia.org/wiki/UTF-8#Description) to identify [subsequent bytes of a multi-byte sequence](https://github.com/facebookresearch/fastText/blob/master/src/dictionary.cc). Knowing this is especially important when choosing the minimum and maximum length of subwords. Further, the EOS token (as specified in the [Dictionary header](https://github.com/facebookresearch/fastText/blob/master/src/dictionary.h)) is considered a character and will not be broken into subwords.

## More examples

In order to have a better knowledge of fastText models, please consider the main [README](https://github.com/facebookresearch/fastText/blob/master/README.md) and in particular [the tutorials on our website](https://fasttext.cc/docs/en/supervised-tutorial.html).

You can find further python examples in [the doc folder](https://github.com/facebookresearch/fastText/tree/master/python/doc/examples).

As with any package you can get help on any Python function using the help function.

For example

```

+>>> import fasttext

+>>> help(fasttext.FastText)

Help on module fasttext.FastText in fasttext:

NAME

fasttext.FastText

DESCRIPTION

# Copyright (c) 2017-present, Facebook, Inc.

# All rights reserved.

#

# This source code is licensed under the MIT license found in the

# LICENSE file in the root directory of this source tree.

FUNCTIONS

load_model(path)

Load a model given a filepath and return a model object.

tokenize(text)

Given a string of text, tokenize it and return a list of tokens

[...]

```

# API

## `train_unsupervised` parameters

```python

input # training file path (required)

model # unsupervised fasttext model {cbow, skipgram} [skipgram]

lr # learning rate [0.05]

dim # size of word vectors [100]

ws # size of the context window [5]

epoch # number of epochs [5]

minCount # minimal number of word occurences [5]

minn # min length of char ngram [3]

maxn # max length of char ngram [6]

neg # number of negatives sampled [5]

wordNgrams # max length of word ngram [1]

loss # loss function {ns, hs, softmax, ova} [ns]

bucket # number of buckets [2000000]

thread # number of threads [number of cpus]

lrUpdateRate # change the rate of updates for the learning rate [100]

t # sampling threshold [0.0001]

verbose # verbose [2]

```

## `train_supervised` parameters

```python

input # training file path (required)

lr # learning rate [0.1]

dim # size of word vectors [100]

ws # size of the context window [5]

epoch # number of epochs [5]

minCount # minimal number of word occurences [1]

minCountLabel # minimal number of label occurences [1]

minn # min length of char ngram [0]

maxn # max length of char ngram [0]

neg # number of negatives sampled [5]

wordNgrams # max length of word ngram [1]

loss # loss function {ns, hs, softmax, ova} [softmax]

bucket # number of buckets [2000000]

thread # number of threads [number of cpus]

lrUpdateRate # change the rate of updates for the learning rate [100]

t # sampling threshold [0.0001]

label # label prefix ['__label__']

verbose # verbose [2]

pretrainedVectors # pretrained word vectors (.vec file) for supervised learning []

```

## `model` object

`train_supervised`, `train_unsupervised` and `load_model` functions return an instance of `_FastText` class, that we generaly name `model` object.

This object exposes those training arguments as properties : `lr`, `dim`, `ws`, `epoch`, `minCount`, `minCountLabel`, `minn`, `maxn`, `neg`, `wordNgrams`, `loss`, `bucket`, `thread`, `lrUpdateRate`, `t`, `label`, `verbose`, `pretrainedVectors`. So `model.wordNgrams` will give you the max length of word ngram used for training this model.

In addition, the object exposes several functions :

```python

get_dimension # Get the dimension (size) of a lookup vector (hidden layer).

# This is equivalent to `dim` property.

get_input_vector # Given an index, get the corresponding vector of the Input Matrix.

get_input_matrix # Get a copy of the full input matrix of a Model.

get_labels # Get the entire list of labels of the dictionary

# This is equivalent to `labels` property.

get_line # Split a line of text into words and labels.

get_output_matrix # Get a copy of the full output matrix of a Model.

get_sentence_vector # Given a string, get a single vector represenation. This function

# assumes to be given a single line of text. We split words on

# whitespace (space, newline, tab, vertical tab) and the control

# characters carriage return, formfeed and the null character.

get_subword_id # Given a subword, return the index (within input matrix) it hashes to.

get_subwords # Given a word, get the subwords and their indicies.

get_word_id # Given a word, get the word id within the dictionary.

get_word_vector # Get the vector representation of word.

get_words # Get the entire list of words of the dictionary

# This is equivalent to `words` property.

is_quantized # whether the model has been quantized

predict # Given a string, get a list of labels and a list of corresponding probabilities.

quantize # Quantize the model reducing the size of the model and it's memory footprint.

save_model # Save the model to the given path

test # Evaluate supervised model using file given by path

test_label # Return the precision and recall score for each label.

```

The properties `words`, `labels` return the words and labels from the dictionary :

```py

model.words # equivalent to model.get_words()

model.labels # equivalent to model.get_labels()

```

The object overrides `__getitem__` and `__contains__` functions in order to return the representation of a word and to check if a word is in the vocabulary.

```py

model['king'] # equivalent to model.get_word_vector('king')

'king' in model # equivalent to `'king' in model.get_words()`

```

�����������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/docs/english-vectors.md��������������������������������������������������������������0000644�0001750�0000176�00000004764�13651775021�017267� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������---

id: english-vectors

title: English word vectors

---

This page gathers several pre-trained word vectors trained using fastText.

### Download pre-trained word vectors

Pre-trained word vectors learned on different sources can be downloaded below:

1. [wiki-news-300d-1M.vec.zip](https://dl.fbaipublicfiles.com/fasttext/vectors-english/wiki-news-300d-1M.vec.zip): 1 million word vectors trained on Wikipedia 2017, UMBC webbase corpus and statmt.org news dataset (16B tokens).

2. [wiki-news-300d-1M-subword.vec.zip](https://dl.fbaipublicfiles.com/fasttext/vectors-english/wiki-news-300d-1M-subword.vec.zip): 1 million word vectors trained with subword infomation on Wikipedia 2017, UMBC webbase corpus and statmt.org news dataset (16B tokens).

3. [crawl-300d-2M.vec.zip](https://dl.fbaipublicfiles.com/fasttext/vectors-english/crawl-300d-2M.vec.zip): 2 million word vectors trained on Common Crawl (600B tokens).

4. [crawl-300d-2M-subword.zip](https://dl.fbaipublicfiles.com/fasttext/vectors-english/crawl-300d-2M-subword.zip): 2 million word vectors trained with subword information on Common Crawl (600B tokens).

### Format

The first line of the file contains the number of words in the vocabulary and the size of the vectors.

Each line contains a word followed by its vectors, like in the default fastText text format.

Each value is space separated. Words are ordered by descending frequency.

These text models can easily be loaded in Python using the following code:

```python

import io

def load_vectors(fname):

fin = io.open(fname, 'r', encoding='utf-8', newline='\n', errors='ignore')

n, d = map(int, fin.readline().split())

data = {}

for line in fin:

tokens = line.rstrip().split(' ')

data[tokens[0]] = map(float, tokens[1:])

return data

```

### License

These word vectors are distributed under the [*Creative Commons Attribution-Share-Alike License 3.0*](https://creativecommons.org/licenses/by-sa/3.0/).

### References

If you use these word vectors, please cite the following paper:

T. Mikolov, E. Grave, P. Bojanowski, C. Puhrsch, A. Joulin. [*Advances in Pre-Training Distributed Word Representations*](https://arxiv.org/abs/1712.09405)

```markup

@inproceedings{mikolov2018advances,

title={Advances in Pre-Training Distributed Word Representations},

author={Mikolov, Tomas and Grave, Edouard and Bojanowski, Piotr and Puhrsch, Christian and Joulin, Armand},

booktitle={Proceedings of the International Conference on Language Resources and Evaluation (LREC 2018)},

year={2018}

}

```

������������fastText-0.9.2/docs/cheatsheet.md�������������������������������������������������������������������0000644�0001750�0000176�00000003460�13651775021�016260� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������---

id: cheatsheet

title: Cheatsheet

---

## Word representation learning

In order to learn word vectors do:

```bash

$ ./fasttext skipgram -input data.txt -output model

```

## Obtaining word vectors

Print word vectors for a text file `queries.txt` containing words.

```bash

$ ./fasttext print-word-vectors model.bin < queries.txt

```

## Text classification

In order to train a text classifier do:

```bash

$ ./fasttext supervised -input train.txt -output model

```

Once the model was trained, you can evaluate it by computing the precision and recall at k (P@k and R@k) on a test set using:

```bash

$ ./fasttext test model.bin test.txt 1

```

In order to obtain the k most likely labels for a piece of text, use:

```bash

$ ./fasttext predict model.bin test.txt k

```

In order to obtain the k most likely labels and their associated probabilities for a piece of text, use:

```bash

$ ./fasttext predict-prob model.bin test.txt k

```

If you want to compute vector representations of sentences or paragraphs, please use:

```bash

$ ./fasttext print-sentence-vectors model.bin < text.txt

```

## Quantization

In order to create a `.ftz` file with a smaller memory footprint do:

```bash

$ ./fasttext quantize -output model

```

All other commands such as test also work with this model

```bash

$ ./fasttext test model.ftz test.txt

```

## Autotune

Activate hyperparameter optimization with `-autotune-validation` argument:

```bash

$ ./fasttext supervised -input train.txt -output model -autotune-validation valid.txt

```

Set timeout (in seconds):

```bash

$ ./fasttext supervised -input train.txt -output model -autotune-validation valid.txt -autotune-duration 600

```

Constrain the final model size:

```bash

$ ./fasttext supervised -input train.txt -output model -autotune-validation valid.txt -autotune-modelsize 2M

```

����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/docs/unsupervised-tutorials.md�������������������������������������������������������0000644�0001750�0000176�00000047263�13651775021�020734� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������---

id: unsupervised-tutorial

title: Word representations

---

A popular idea in modern machine learning is to represent words by vectors. These vectors capture hidden information about a language, like word analogies or semantic. It is also used to improve performance of text classifiers.

In this tutorial, we show how to build these word vectors with the fastText tool. To download and install fastText, follow the first steps of [the tutorial on text classification](https://fasttext.cc/docs/en/supervised-tutorial.html).

## Getting the data

In order to compute word vectors, you need a large text corpus. Depending on the corpus, the word vectors will capture different information. In this tutorial, we focus on Wikipedia's articles but other sources could be considered, like news or Webcrawl (more examples [here](http://statmt.org/)). To download a raw dump of Wikipedia, run the following command:

```bash

wget https://dumps.wikimedia.org/enwiki/latest/enwiki-latest-pages-articles.xml.bz2

```

Downloading the Wikipedia corpus takes some time. Instead, lets restrict our study to the first 1 billion bytes of English Wikipedia. They can be found on Matt Mahoney's [website](http://mattmahoney.net/):

```bash

$ mkdir data

$ wget -c http://mattmahoney.net/dc/enwik9.zip -P data

$ unzip data/enwik9.zip -d data

```

A raw Wikipedia dump contains a lot of HTML / XML data. We pre-process it with the wikifil.pl script bundled with fastText (this script was originally developed by Matt Mahoney, and can be found on his [website](http://mattmahoney.net/)).

```bash

$ perl wikifil.pl data/enwik9 > data/fil9

```

We can check the file by running the following command:

```bash

$ head -c 80 data/fil9

anarchism originated as a term of abuse first used against early working class

```

The text is nicely pre-processed and can be used to learn our word vectors.

## Training word vectors

Learning word vectors on this data can now be achieved with a single command:

```bash

$ mkdir result

$ ./fasttext skipgram -input data/fil9 -output result/fil9

```

To decompose this command line: ./fastext calls the binary fastText executable (see how to install fastText [here](https://fasttext.cc/docs/en/support.html)) with the 'skipgram' model (it can also be 'cbow'). We then specify the requires options '-input' for the location of the data and '-output' for the location where the word representations will be saved.

While fastText is running, the progress and estimated time to completion is shown on your screen. Once the program finishes, there should be two files in the result directory:

```bash

$ ls -l result

-rw-r-r-- 1 bojanowski 1876110778 978480850 Dec 20 11:01 fil9.bin

-rw-r-r-- 1 bojanowski 1876110778 190004182 Dec 20 11:01 fil9.vec

```

The `fil9.bin` file is a binary file that stores the whole fastText model and can be subsequently loaded. The `fil9.vec` file is a text file that contains the word vectors, one per line for each word in the vocabulary:

```bash

$ head -n 4 result/fil9.vec

218316 100

the -0.10363 -0.063669 0.032436 -0.040798 0.53749 0.00097867 0.10083 0.24829 ...

of -0.0083724 0.0059414 -0.046618 -0.072735 0.83007 0.038895 -0.13634 0.60063 ...

one 0.32731 0.044409 -0.46484 0.14716 0.7431 0.24684 -0.11301 0.51721 0.73262 ...

```

The first line is a header containing the number of words and the dimensionality of the vectors. The subsequent lines are the word vectors for all words in the vocabulary, sorted by decreasing frequency.

Learning word vectors on this data can now be achieved with a single command:

```py

>>> import fasttext

>>> model = fasttext.train_unsupervised('data/fil9')

```

While fastText is running, the progress and estimated time to completion is shown on your screen. Once the training finishes, `model` variable contains information on the trained model, and can be used for querying:

```py

>>> model.words

[u'the', u'of', u'one', u'zero', u'and', u'in', u'two', u'a', u'nine', u'to', u'is', ...

```

It returns all words in the vocabulary, sorted by decreasing frequency. We can get the word vector by:

```py

>>> model.get_word_vector("the")

array([-0.03087516, 0.09221972, 0.17660329, 0.17308897, 0.12863874,

0.13912526, -0.09851588, 0.00739991, 0.37038437, -0.00845221,

...

-0.21184735, -0.05048715, -0.34571868, 0.23765688, 0.23726143],

dtype=float32)

```

We can save this model on disk as a binary file:

```py

>>> model.save_model("result/fil9.bin")

```

and reload it later instead of training again:

```py

$ python

>>> import fasttext

>>> model = fasttext.load_model("result/fil9.bin")

```

## Advanced readers: skipgram versus cbow

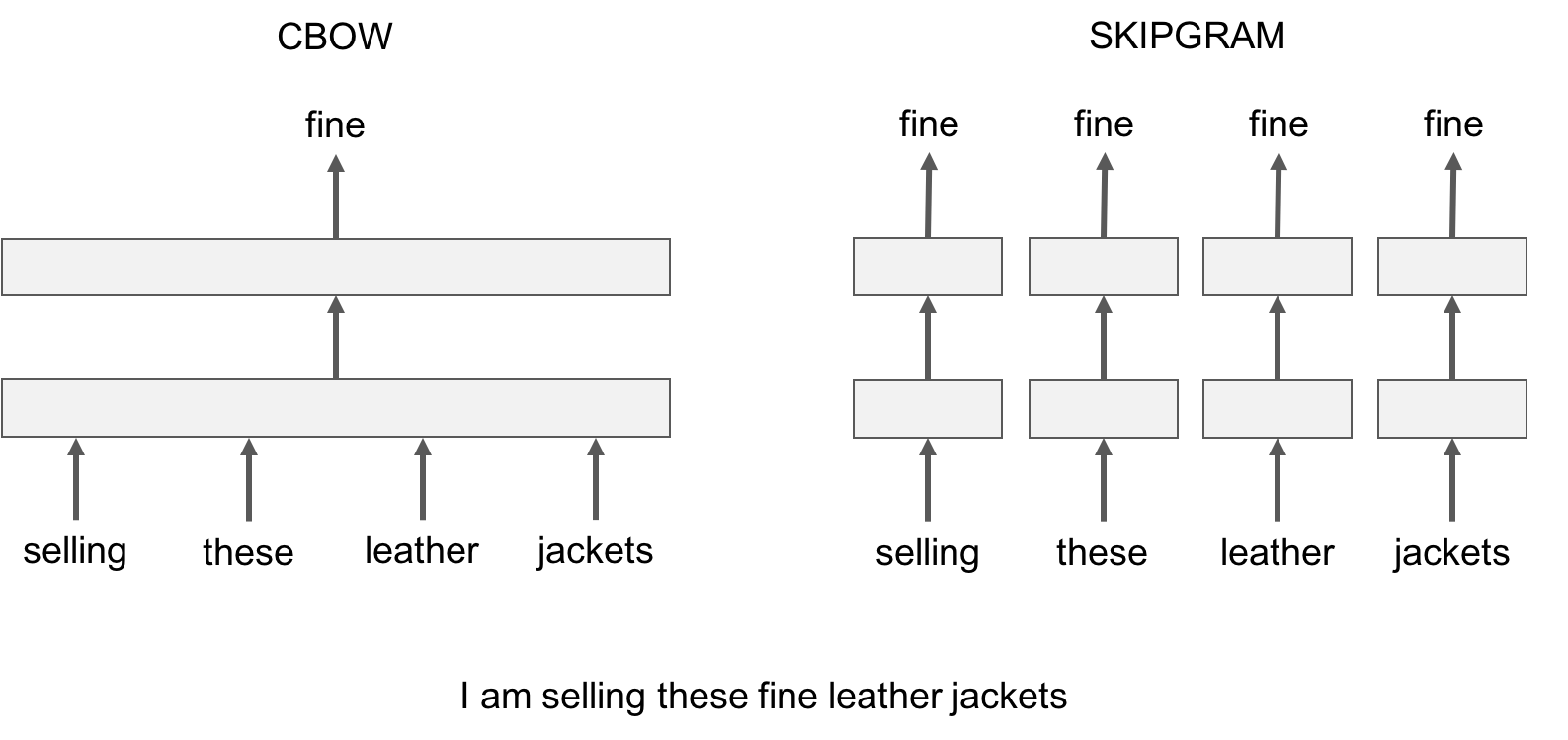

fastText provides two models for computing word representations: skipgram and cbow ('**c**ontinuous-**b**ag-**o**f-**w**ords').

The skipgram model learns to predict a target word thanks to a nearby word. On the other hand, the cbow model predicts the target word according to its context. The context is represented as a bag of the words contained in a fixed size window around the target word.

Let us illustrate this difference with an example: given the sentence *'Poets have been mysteriously silent on the subject of cheese'* and the target word '*silent*', a skipgram model tries to predict the target using a random close-by word, like '*subject' *or* '*mysteriously*'**. *The cbow model takes all the words in a surrounding window, like {*been, *mysteriously*, on, the*}, and uses the sum of their vectors to predict the target. The figure below summarizes this difference with another example.

To train a cbow model with fastText, you run the following command:

```bash

./fasttext cbow -input data/fil9 -output result/fil9

```

```py

>>> import fasttext

>>> model = fasttext.train_unsupervised('data/fil9', "cbow")

```

In practice, we observe that skipgram models works better with subword information than cbow.

## Advanced readers: playing with the parameters

So far, we run fastText with the default parameters, but depending on the data, these parameters may not be optimal. Let us give an introduction to some of the key parameters for word vectors.

The most important parameters of the model are its dimension and the range of size for the subwords. The dimension (*dim*) controls the size of the vectors, the larger they are the more information they can capture but requires more data to be learned. But, if they are too large, they are harder and slower to train. By default, we use 100 dimensions, but any value in the 100-300 range is as popular. The subwords are all the substrings contained in a word between the minimum size (*minn*) and the maximal size (*maxn*). By default, we take all the subword between 3 and 6 characters, but other range could be more appropriate to different languages:

```bash

$ ./fasttext skipgram -input data/fil9 -output result/fil9 -minn 2 -maxn 5 -dim 300

```

```py

>>> import fasttext

>>> model = fasttext.train_unsupervised('data/fil9', minn=2, maxn=5, dim=300)

```

Depending on the quantity of data you have, you may want to change the parameters of the training. The *epoch* parameter controls how many times the model will loop over your data. By default, we loop over the dataset 5 times. If you dataset is extremely massive, you may want to loop over it less often. Another important parameter is the learning rate -*lr*. The higher the learning rate is, the faster the model converge to a solution but at the risk of overfitting to the dataset. The default value is 0.05 which is a good compromise. If you want to play with it we suggest to stay in the range of [0.01, 1]:

```bash

$ ./fasttext skipgram -input data/fil9 -output result/fil9 -epoch 1 -lr 0.5

```

```py

>>> import fasttext

>>> model = fasttext.train_unsupervised('data/fil9', epoch=1, lr=0.5)

```

Finally , fastText is multi-threaded and uses 12 threads by default. If you have less CPU cores (say 4), you can easily set the number of threads using the *thread* flag:

```bash

$ ./fasttext skipgram -input data/fil9 -output result/fil9 -thread 4

```

```py

>>> import fasttext

>>> model = fasttext.train_unsupervised('data/fil9', thread=4)

```

## Printing word vectors

Searching and printing word vectors directly from the `fil9.vec` file is cumbersome. Fortunately, there is a `print-word-vectors` functionality in fastText.

For example, we can print the word vectors of words *asparagus,* *pidgey* and *yellow* with the following command:

```bash

$ echo "asparagus pidgey yellow" | ./fasttext print-word-vectors result/fil9.bin

asparagus 0.46826 -0.20187 -0.29122 -0.17918 0.31289 -0.31679 0.17828 -0.04418 ...

pidgey -0.16065 -0.45867 0.10565 0.036952 -0.11482 0.030053 0.12115 0.39725 ...

yellow -0.39965 -0.41068 0.067086 -0.034611 0.15246 -0.12208 -0.040719 -0.30155 ...

```

```py

>>> [model.get_word_vector(x) for x in ["asparagus", "pidgey", "yellow"]]

[array([-0.25751096, -0.18716481, 0.06921121, 0.06455903, 0.29168844,

0.15426874, -0.33448914, -0.427215 , 0.7813013 , -0.10600132,

...

0.37090245, 0.39266172, -0.4555302 , 0.27452755, 0.00467369],

dtype=float32),

array([-0.20613593, -0.25325796, -0.2422259 , -0.21067499, 0.32879013,

0.7269511 , 0.3782259 , 0.11274897, 0.246764 , -0.6423613 ,

...

0.46302193, 0.2530962 , -0.35795924, 0.5755718 , 0.09843876],

dtype=float32),

array([-0.304823 , 0.2543754 , -0.2198013 , -0.25421786, 0.11219151,

0.38286993, -0.22636674, -0.54023844, 0.41095474, -0.3505803 ,

...

0.54788435, 0.36740595, -0.5678512 , 0.07523401, -0.08701935],

dtype=float32)]

```

A nice feature is that you can also query for words that did not appear in your data! Indeed words are represented by the sum of its substrings. As long as the unknown word is made of known substrings, there is a representation of it!

As an example let's try with a misspelled word:

```bash

$ echo "enviroment" | ./fasttext print-word-vectors result/fil9.bin

```

```py

>>> model.get_word_vector("enviroment")

```

You still get a word vector for it! But how good it is? Let's find out in the next sections!

## Nearest neighbor queries

A simple way to check the quality of a word vector is to look at its nearest neighbors. This give an intuition of the type of semantic information the vectors are able to capture.

This can be achieved with the nearest neighbor (*nn*) functionality. For example, we can query the 10 nearest neighbors of a word by running the following command:

```bash

$ ./fasttext nn result/fil9.bin

Pre-computing word vectors... done.

```

Then we are prompted to type our query word, let us try *asparagus* :

```bash

Query word? asparagus

beetroot 0.812384

tomato 0.806688

horseradish 0.805928

spinach 0.801483

licorice 0.791697

lingonberries 0.781507

asparagales 0.780756

lingonberry 0.778534

celery 0.774529

beets 0.773984

```

```py

>>> model.get_nearest_neighbors('asparagus')

[(0.812384, u'beetroot'), (0.806688, u'tomato'), (0.805928, u'horseradish'), (0.801483, u'spinach'), (0.791697, u'licorice'), (0.781507, u'lingonberries'), (0.780756, u'asparagales'), (0.778534, u'lingonberry'), (0.774529, u'celery'), (0.773984, u'beets')]

```

Nice! It seems that vegetable vectors are similar. Note that the nearest neighbor is the word *asparagus* itself, this means that this word appeared in the dataset. What about pokemons?

```bash

Query word? pidgey

pidgeot 0.891801

pidgeotto 0.885109

pidge 0.884739

pidgeon 0.787351

pok 0.781068

pikachu 0.758688

charizard 0.749403

squirtle 0.742582

beedrill 0.741579

charmeleon 0.733625

```

```py

>>> model.get_nearest_neighbors('pidgey')

[(0.891801, u'pidgeot'), (0.885109, u'pidgeotto'), (0.884739, u'pidge'), (0.787351, u'pidgeon'), (0.781068, u'pok'), (0.758688, u'pikachu'), (0.749403, u'charizard'), (0.742582, u'squirtle'), (0.741579, u'beedrill'), (0.733625, u'charmeleon')]

```

Different evolution of the same Pokemon have close-by vectors! But what about our misspelled word, is its vector close to anything reasonable? Let s find out:

```bash

Query word? enviroment

enviromental 0.907951

environ 0.87146

enviro 0.855381

environs 0.803349

environnement 0.772682

enviromission 0.761168

realclimate 0.716746

environment 0.702706

acclimatation 0.697196

ecotourism 0.697081

```

```py

>>> model.get_nearest_neighbors('enviroment')

[(0.907951, u'enviromental'), (0.87146, u'environ'), (0.855381, u'enviro'), (0.803349, u'environs'), (0.772682, u'environnement'), (0.761168, u'enviromission'), (0.716746, u'realclimate'), (0.702706, u'environment'), (0.697196, u'acclimatation'), (0.697081, u'ecotourism')]

```

Thanks to the information contained within the word, the vector of our misspelled word matches to reasonable words! It is not perfect but the main information has been captured.

## Advanced reader: measure of similarity

In order to find nearest neighbors, we need to compute a similarity score between words. Our words are represented by continuous word vectors and we can thus apply simple similarities to them. In particular we use the cosine of the angles between two vectors. This similarity is computed for all words in the vocabulary, and the 10 most similar words are shown. Of course, if the word appears in the vocabulary, it will appear on top, with a similarity of 1.

## Word analogies

In a similar spirit, one can play around with word analogies. For example, we can see if our model can guess what is to France, and what Berlin is to Germany.

This can be done with the *analogies* functionality. It takes a word triplet (like *Germany Berlin France*) and outputs the analogy:

```bash

$ ./fasttext analogies result/fil9.bin

Pre-computing word vectors... done.

Query triplet (A - B + C)? berlin germany france

paris 0.896462

bourges 0.768954

louveciennes 0.765569

toulouse 0.761916

valenciennes 0.760251

montpellier 0.752747

strasbourg 0.744487

meudon 0.74143

bordeaux 0.740635

pigneaux 0.736122

```

```py

>>> model.get_analogies("berlin", "germany", "france")

[(0.896462, u'paris'), (0.768954, u'bourges'), (0.765569, u'louveciennes'), (0.761916, u'toulouse'), (0.760251, u'valenciennes'), (0.752747, u'montpellier'), (0.744487, u'strasbourg'), (0.74143, u'meudon'), (0.740635, u'bordeaux'), (0.736122, u'pigneaux')]

```

The answer provided by our model is *Paris*, which is correct. Let's have a look at a less obvious example:

```bash

Query triplet (A - B + C)? psx sony nintendo

gamecube 0.803352

nintendogs 0.792646

playstation 0.77344

sega 0.772165

gameboy 0.767959

arcade 0.754774

playstationjapan 0.753473

gba 0.752909

dreamcast 0.74907

famicom 0.745298

```

```py

>>> model.get_analogies("psx", "sony", "nintendo")

[(0.803352, u'gamecube'), (0.792646, u'nintendogs'), (0.77344, u'playstation'), (0.772165, u'sega'), (0.767959, u'gameboy'), (0.754774, u'arcade'), (0.753473, u'playstationjapan'), (0.752909, u'gba'), (0.74907, u'dreamcast'), (0.745298, u'famicom')]

```

Our model considers that the *nintendo* analogy of a *psx* is the *gamecube*, which seems reasonable. Of course the quality of the analogies depend on the dataset used to train the model and one can only hope to cover fields only in the dataset.

## Importance of character n-grams

Using subword-level information is particularly interesting to build vectors for unknown words. For example, the word *gearshift* does not exist on Wikipedia but we can still query its closest existing words:

```bash

Query word? gearshift

gearing 0.790762

flywheels 0.779804

flywheel 0.777859

gears 0.776133

driveshafts 0.756345

driveshaft 0.755679

daisywheel 0.749998

wheelsets 0.748578

epicycles 0.744268

gearboxes 0.73986

```

```py

>>> model.get_nearest_neighbors('gearshift')

[(0.790762, u'gearing'), (0.779804, u'flywheels'), (0.777859, u'flywheel'), (0.776133, u'gears'), (0.756345, u'driveshafts'), (0.755679, u'driveshaft'), (0.749998, u'daisywheel'), (0.748578, u'wheelsets'), (0.744268, u'epicycles'), (0.73986, u'gearboxes')]

```

Most of the retrieved words share substantial substrings but a few are actually quite different, like *cogwheel*. You can try other words like *sunbathe* or *grandnieces*.

Now that we have seen the interest of subword information for unknown words, let's check how it compares to a model that does not use subword information. To train a model without subwords, just run the following command:

```bash

$ ./fasttext skipgram -input data/fil9 -output result/fil9-none -maxn 0

```

The results are saved in result/fil9-non.vec and result/fil9-non.bin.

```py

>>> model_without_subwords = fasttext.train_unsupervised('data/fil9', maxn=0)

```

To illustrate the difference, let us take an uncommon word in Wikipedia, like *accomodation* which is a misspelling of *accommodation**.* Here is the nearest neighbors obtained without subwords:

```bash

$ ./fasttext nn result/fil9-none.bin

Query word? accomodation

sunnhordland 0.775057

accomodations 0.769206

administrational 0.753011

laponian 0.752274

ammenities 0.750805

dachas 0.75026

vuosaari 0.74172

hostelling 0.739995

greenbelts 0.733975

asserbo 0.732465

```

```py

>>> model_without_subwords.get_nearest_neighbors('accomodation')

[(0.775057, u'sunnhordland'), (0.769206, u'accomodations'), (0.753011, u'administrational'), (0.752274, u'laponian'), (0.750805, u'ammenities'), (0.75026, u'dachas'), (0.74172, u'vuosaari'), (0.739995, u'hostelling'), (0.733975, u'greenbelts'), (0.732465, u'asserbo')]

```

The result does not make much sense, most of these words are unrelated. On the other hand, using subword information gives the following list of nearest neighbors:

```bash

Query word? accomodation

accomodations 0.96342

accommodation 0.942124

accommodations 0.915427

accommodative 0.847751

accommodating 0.794353

accomodated 0.740381

amenities 0.729746

catering 0.725975

accomodate 0.703177

hospitality 0.701426

```

```py

>>> model.get_nearest_neighbors('accomodation')

[(0.96342, u'accomodations'), (0.942124, u'accommodation'), (0.915427, u'accommodations'), (0.847751, u'accommodative'), (0.794353, u'accommodating'), (0.740381, u'accomodated'), (0.729746, u'amenities'), (0.725975, u'catering'), (0.703177, u'accomodate'), (0.701426, u'hospitality')]

```

The nearest neighbors capture different variation around the word *accommodation*. We also get semantically related words such as *amenities* or *catering*.

## Conclusion

In this tutorial, we show how to obtain word vectors from Wikipedia. This can be done for any language and we provide [pre-trained models](https://fasttext.cc/docs/en/pretrained-vectors.html) with the default setting for 294 of them.

���������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/docs/api.md��������������������������������������������������������������������������0000644�0001750�0000176�00000000165�13651775021�014713� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������---

id: api

title:API

---

We automatically generate our [API documentation](/docs/en/html/index.html) with doxygen.

�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������fastText-0.9.2/docs/faqs.md�������������������������������������������������������������������������0000644�0001750�0000176�00000011226�13651775021�015074� 0����������������������������������������������������������������������������������������������������ustar �kenhys��������������������������docker�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������---

id: faqs

title:FAQ

---

## What is fastText? Are there tutorials?

FastText is a library for text classification and representation. It transforms text into continuous vectors that can later be used on any language related task. A few tutorials are available.

## How can I reduce the size of my fastText models?

fastText uses a hashtable for either word or character ngrams. The size of the hashtable directly impacts the size of a model. To reduce the size of the model, it is possible to reduce the size of this table with the option '-hash'. For example a good value is 20000. Another option that greatly impacts the size of a model is the size of the vectors (-dim). This dimension can be reduced to save space but this can significantly impact performance. If that still produce a model that is too big, one can further reduce the size of a trained model with the quantization option.

```bash

./fasttext quantize -output model

```

## What would be the best way to represent word phrases rather than words?

Currently the best approach to represent word phrases or sentence is to take a bag of words of word vectors. Additionally, for phrases like “New York”, preprocessing the data so that it becomes a single token “New_York” can greatly help.

## Why does fastText produce vectors even for unknown words?

One of the key features of fastText word representation is its ability to produce vectors for any words, even made-up ones.

Indeed, fastText word vectors are built from vectors of substrings of characters contained in it.

This allows to build vectors even for misspelled words or concatenation of words.

## Why is the hierarchical softmax slightly worse in performance than the full softmax?

The hierarchical softmax is an approximation of the full softmax loss that allows to train on large number of class efficiently. This is often at the cost of a few percent of accuracy.

Note also that this loss is thought for classes that are unbalanced, that is some classes are more frequent than others. If your dataset has a balanced number of examples per class, it is worth trying the negative sampling loss (-loss ns -neg 100).

However, negative sampling will still be very slow at test time, since the full softmax will be computed.

## Can we run fastText program on a GPU?

As of now, fastText only works on CPU.

Please note that one of the goal of fastText is to be an efficient CPU tool, allowing to train models without requiring a GPU.

## Can I use fastText with python? Or other languages?

[Python is officially supported](/docs/en/support.html#building-fasttext-python-module).

There are few unofficial wrappers for javascript, lua and other languages available on github.